CONTENTS

1.1 Digital Computers and Digital Systems

1.1.1 Applications and types of microprocessors

1.2.2.1 Diminished Radix Complement

1.2.2.3 Subtraction with Complements

1.2.9 Real Numbers - Floating Point

2.2 Converting Analogue Signals

3 Introduction to Digital Signal Processors

3.1 How DSPs are Different from Other Microprocessors

3.2 Characteristics of DSP processors

3.2.3 Input and Output Interfaces

3.2.5 Data formats in DSP processors

3.2.6 Precision and dynamic range

4.1 Features for Reducing Memory Access Requirements

4.4 External Memory Interfaces

4.5 Multiprocessor Support in External Memory Interfaces

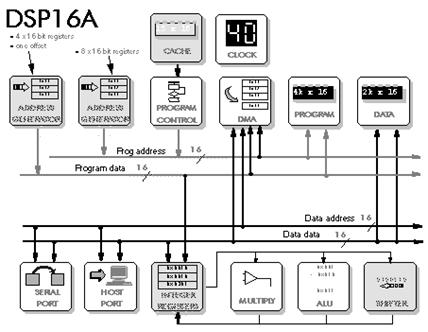

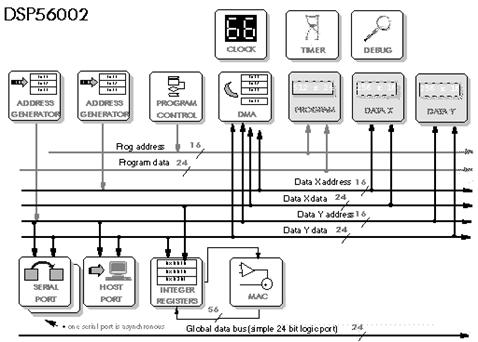

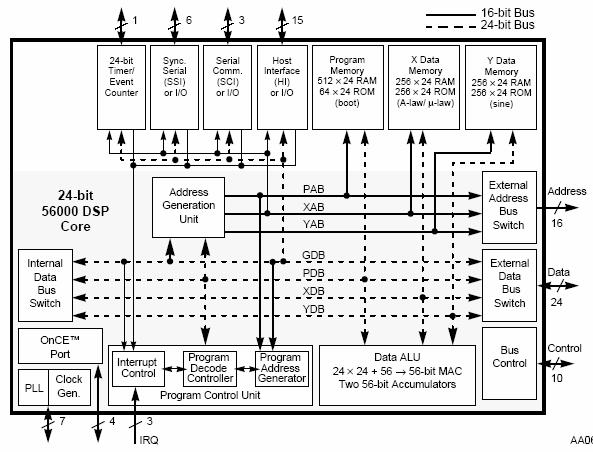

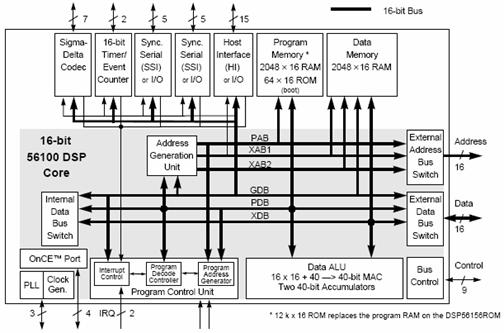

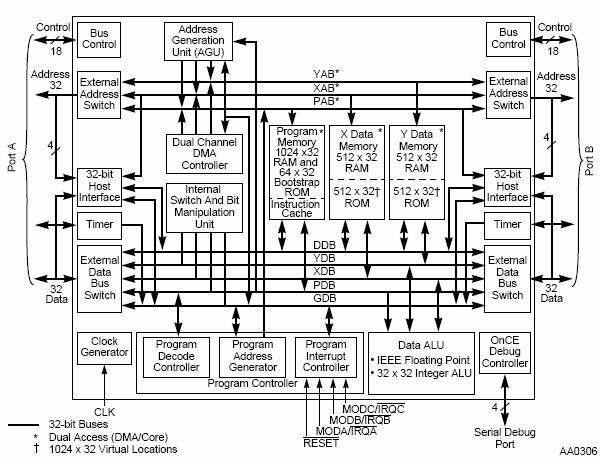

5.4 DSP56002 – DSP56156 – DSP96002

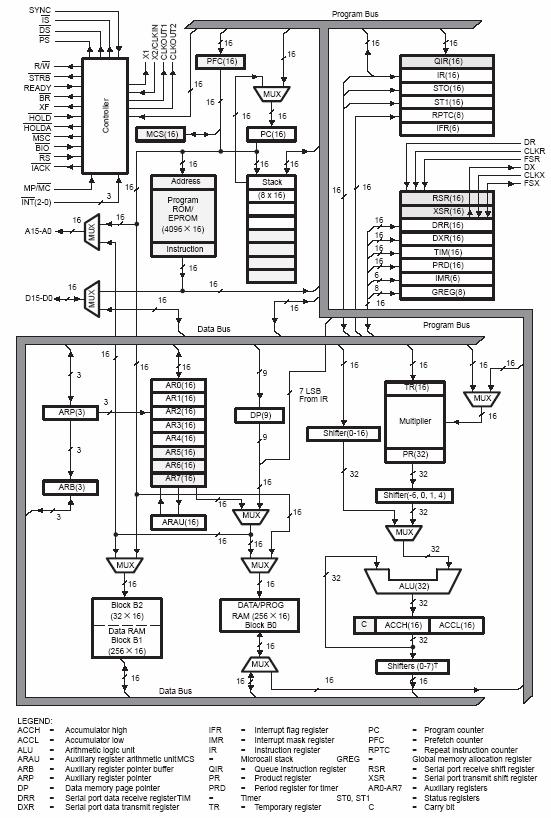

5.5 TMS320C25 - TMS320C50 - TMS320C30 - TMS320C40

6 Choosing the Right DSP Processor

6.7 Power Consumption and Management

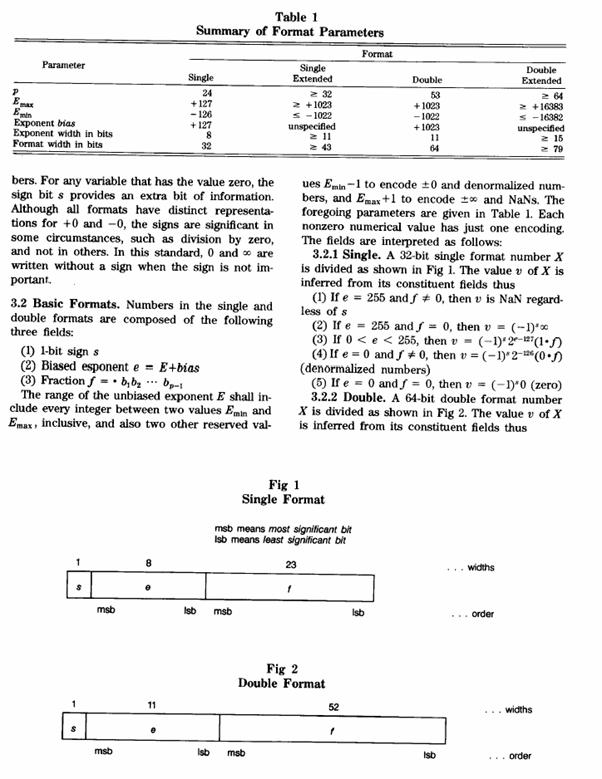

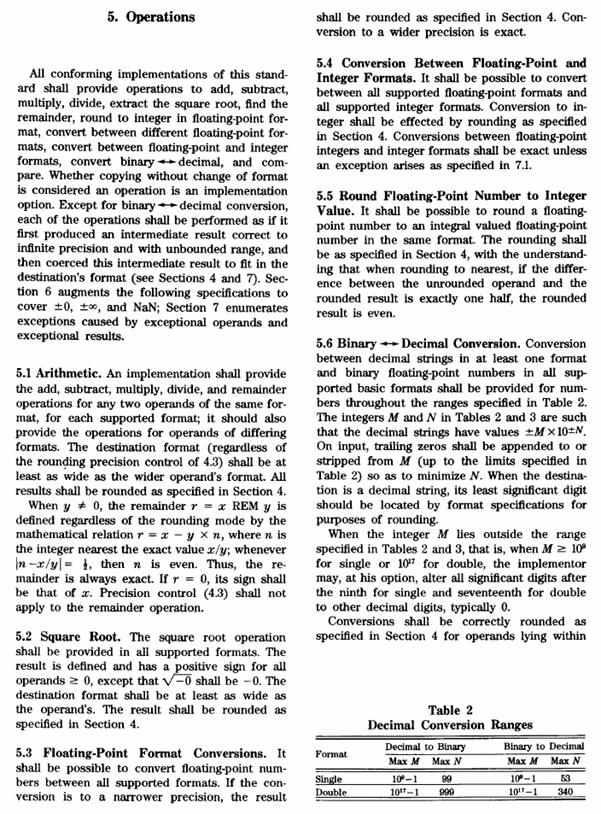

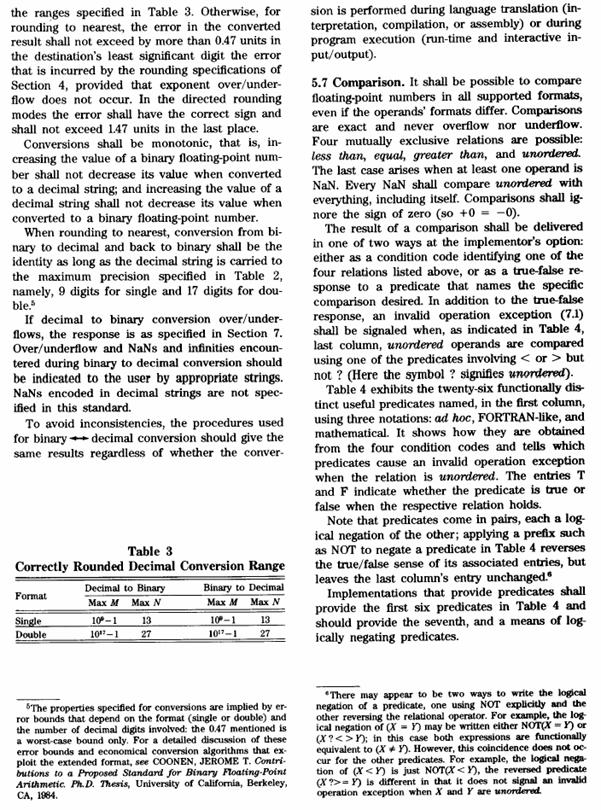

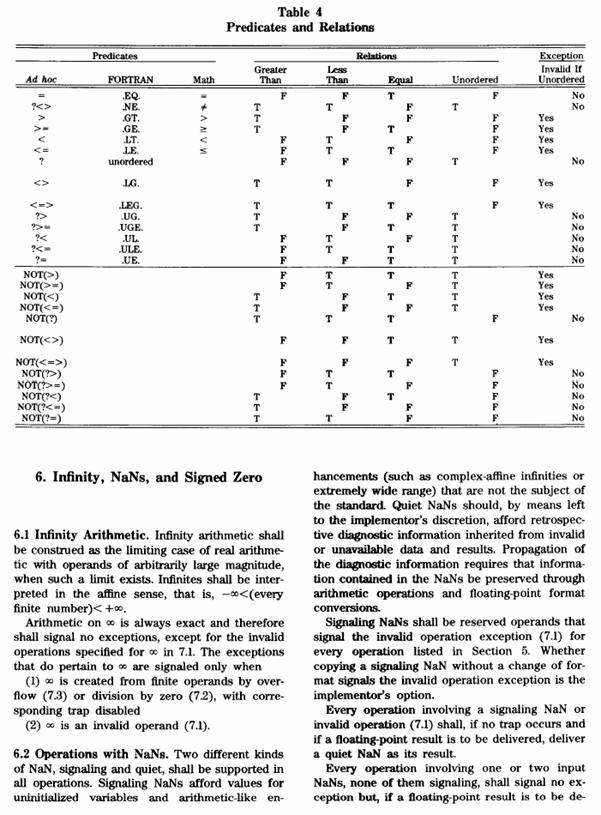

APPENDIX A - IEEE Standard for Binary Floating Point Arithmetic

APPENDIX B - IEEE Standard for Radix-Independent Floating Point Arithmetic

APPENDIX C – Calculation of Emax and bias

1 Binary Systems

1.1 Digital Computers and Digital Systems

Digital computers have made possible many scientific, industrial, and commercial advances that would have been unattainable otherwise. Our space program would have been impossible without real-time, continuous computer monitoring, and many business enterprises function efficiently only with the aid of automatic data processing. Computers are used in scientific calculations, commercial and business data processing, air traffic control, space guidance, the educational field, and many other areas. The most striking property of a digital computer is its generality; it can follow a sequence of instructions, called a program, that operates on given data. The user can specify and change programs and/or data according to the specific need. As a result of this flexibility, general-purpose digital computers can perform a wide variety of information-processing tasks.

The general-purpose digital computer is the best-known example of a digital system. Other examples include telephone switching exchanges, digital voltmeters, digital counters, electronic calculators, and digital displays. Characteristic of a digital system is its manipulation of discrete elements of information. Such discrete elements may be electric impulses, the decimal digits, the letters of an alphabet, arithmetic operations, punctuation marks, or any other set of meaningful symbols. The juxtaposition of discrete elements of information represents a quantity of information. For example, the letters d, o, and g form the word dog. The digits 237 form a number. Thus, a sequence of discrete elements forms a language, that is, a discipline that conveys information. Early digital computers were used mostly for numerical computations. In this case, the discrete elements used are the digits. From this application, the term digital computer has emerged. A more appropriate name for a digital computer would be a “discrete information-processing system.”

Discrete elements of information are represented in a digital system by physical quantities called signals. Electrical signals such as voltages and currents are the most common. The signals in all present-day electronic digital systems have only two discrete values and are said to be binary. The digital-system designer is restricted to the use of binary signals because of the lower reliability of many-valued electronic circuits. In other words, a circuit with ten states, using one discrete voltage value for each state, can be designed, but it would possess a very low reliability of operation. In contrast, a transistor circuit that is either on or off has two possible signal values and can be constructed to be extremely reliable. Because of this physical restriction of components, and because human logic tends to be binary, digital systems that are constrained to take discrete values are further constrained to take binary values.

Discrete quantities of information arise either from the nature of the process or may be quantized from a continuous process. For example, a payroll schedule is an inherently discrete process that contains employee names, social security numbers, weekly salaries, income taxes, etc. An employee’s paycheck is processed using discrete data values such as letters of the alphabet (names), digits (salary), and special symbols such as $. On the other hand, a research scientist may observe a continuous process but record only specific quantities in tabular form. The scientist is thus quantizing his continuous data. Each number in his table is a discrete element of information.

Many physical systems can be described mathematically by differential equations whose solutions as a function of time give the complete mathematical behavior of the process. An analog computer performs a direct simulation of a physical system. Each section of the computer is the analog of some particular portion of the process under study. The variables in the analog computer are represented by continuous signals, usually electric voltages that vary with time. The signal variables are considered analogous to those of the process and behave in the same manner. Thus, measurements of the analog voltage can be substituted for variables of the process. The term analog signal is sometimes substituted for continuous signal because “analog computer” has come to mean a computer that manipulates continuous variables.

To simulate a physical process in a digital computer, the quantities must be quantized. When the variables of the process are represented by real-time continuous signals, the latter are quantized by an analog-to-digital conversion device. A physical system whose behavior is described by mathematical equations is simulated in a digital computer by means of numerical methods. When the problem to be processed is inherently discrete, as in commercial applications, the digital computer manipulates the variables in their natural form.

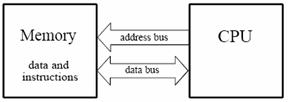

The memory unit of a digital computer stores programs as well as input, output, and intermediate data. The processor unit performs arithmetic and other data-processing tasks as specified by a program. The control unit supervises the flow of information between the various units. The control unit retrieves the instructions, one by one, from the program that is stored in memory. For each instruction, the control unit informs the processor to execute the operation specified by the instruction. Both program and data are stored in memory. The control unit supervises the program instructions, and the processor manipulates the data as specified by the program.

The program and data prepared by the user are transferred into the memory unit by means of an input device such as a keyboard. An output device, such as a printer, receives the result of the computations and the printed results are presented to the user. The input and output devices are special digital systems driven by electromechanical parts and controlled by electronic digital circuits.

An electronic calculator is a digital system similar to a digital computer, with the input device being a keyboard and the output device a numerical display. Instructions are entered in the calculator by means of the function keys, such as plus and minus. Data are entered through the numeric keys. Results are displayed directly in numeric form. Some calculators come close to resembling a digital computer by having printing capabilities and programmable facilities. A digital computer, however, is a more powerful device than a calculator. A digital computer can accommodate many other input and output devices; it can perform not only arithmetic computations, but logical operations as well and can be programmed to make decisions based on internal and external conditions.

It has already been mentioned that a digital computer manipulates discrete elements of information and that these elements are represented in the binary form. Operands used for calculations may be expressed in the binary number system. Other discrete elements, including the decimal digits, are represented in binary codes. Data processing is carried out by means of binary logic elements using binary signals. Quantities are stored in binary storage elements.

1.1.1 Applications and types of microprocessors

Today, microprocessors are found in two major application areas:

• Computer system applications

• Embedded system applications

Embedded systems are often high-volume applications for which manufacturing cost is a key factor. More and more embedded systems are mobile battery-operated systems. For such systems power consumption (battery time) and size are also critical factors. Because they are specifically designed to support a single application, embedded systems only integrate the hardware required to support this application. They often have simpler architectures than computer systems. On the other hand, they often have to perform operations with timings that are much more critical than in computer systems.

A cellular phone, for instance, must compress speech in real time; otherwise, the process will produce audible noise. Embedded systems must also perform with very high reliability: a software crash would be unacceptable in an ABS brake application. Digital Signal Processing applications are often viewed as a third category of microprocessor applications because they use specialized CPUs called DSPs. However, in reality they qualify as specialized embedded applications.

Today, there are 3 different types of microprocessors optimized to be used in each application area:

• Computer systems: General-purpose microprocessors.

• Embedded applications: Microcontrollers

• Signal processing applications: Digital Signal Processors (DSPs)

In reality, the boundaries of application areas are not as well defined as they seem. For instance, DSPs can be used in applications requiring a high computational speed, but not necessarily related to signal processing. Such applications include computer video boards and specialized co-processor boards designed for intensive scientific computation. On the other hand, powerful general-purpose microprocessors are used in high-end digital signal processing equipment designed for algorithm development and rapid prototyping. The following sections list the typical features and application areas for the three types of microprocessors.

General purpose microprocessors

Applications: Computer systems

Manufacturers and models: Intel-Pentium, Motorola-PowerPC, Digital-Alpha Chip, LSI Logic-SPARC family (SUN), ... etc.

Typical features

• Wide address bus allowing the management of large memory spaces

• Integrated hardware memory management unit

• Wide data formats (32 bits or more)

• Integrated co-processor, or Arithmetic Logic Unit supporting complex numerical operations, such as floating point multiplications.

• Sophisticated addressing modes to efficiently support high-level language functions.

• Large silicon area

• High cost

• High power consumption

Embedded systems: Microcontrollers

Application examples: Television set, wristwatches, TV/VCR remote control, home appliances, musical cards, electronic fuel injection, ABS brakes, hard disk drive, computer mouse / keyboard, USB controller, computer printer, photocopy machine, ... etc.

Manufacturers and models: Motorola-68HC11, Intel-8751, Microchip-PIC16/17 family, Cypress-PSoC family, … etc.

Typical features

• Memory and peripherals integrated on the chip

• Narrow address bus allowing only limited amounts of memory.

• Narrow data formats (8 bits or 16 bits typical)

• No coprocessor, limited Arithmetic-Logic Unit.

• Limited addressing modes (High-level language programming is often inefficient)

• Small silicon area

• Low cost

• Low power consumption.

Signal processing: DSPs

Application examples: Telecommunication systems, control systems, altitude and flight control systems in aerospace applications, audio/video recording and playback (compact-disk/MP3 players, video cameras, etc.), high-performance hard-disk drives, modems, video boards, noise cancellation systems, … etc.

Manufacturers and models: Texas Instruments TMS320C6000, TMS320C5000, Motorola 56000, 96000, Analog Devices ADSP2100, ADSP21000, ... etc.

Typical features

• Fixed-point processor (TMS320C5000, 56000...) or floating point processor (TMS320C67, 96000...)

• Architecture optimized for intensive computation. For instance, the TMS320C67 can perform 1000 million floating point operations per second (1 GFLOPS).

• Narrow address bus supporting only limited amounts of memory.

• Specialized addressing modes to efficiently support signal processing operations (circular addressing for filters, bit-reverse addressing for Fast Fourier Transforms, etc.)

• Narrow data formats (16 bits or 32 bits typical).

• Many specialized peripherals integrated on the chip (serial ports, memory, timers, etc.)

• Low power consumption.

• Low cost.

1.2 Binary Numbers

A decimal number such as 7392 represents a quantity equal to 7 thousands plus 3 hundreds, plus 9 tens, plus 2 units. The thousands, hundreds, etc. are powers of 10 implied by the position of the coefficients. To be more exact, 7392 should be written as

$$7 \times 10^3 + 3 \times 10^2 + 9 \times 10^1 + 2 \times 10^0$$

However, the convention is to write only the coefficients and from their position deduce the necessary powers of 10. In general, a number with a decimal point is represented by a series of coefficients as follows:

$$a_5\, a_4\, a_3\, a_2\, a_1\, a_0 \,.\, a_{-1}\, a_{-2}\, a_{-3}$$

The $a_j$ coefficients are one of the ten digits (0, 1, 2, … , 9), and the subscript value $j$ gives the place value and, hence, the power of 10 by which the coefficient must be multiplied:

$$10^5 a_5 + 10^4 a_4 + 10^3 a_3 + 10^2 a_2 + 10^1 a_1 + 10^0 a_0 + 10^{-1} a_{-1} + 10^{-2} a_{-2} + 10^{-3} a_{-3}$$

The decimal number system is said to be of base, or radix, 10 because it uses ten digits and the coefficients are multiplied by powers of 10. In the binary system, the coefficients have two possible values: 0 and 1. Each coefficient $a_j$ is multiplied by $2^j$. For example, the decimal equivalent of the binary number 11010.11 is 26.75, as shown from the multiplication of the coefficients by powers of 2:

$$1 \times 2^4 + 1 \times 2^3 + 0 \times 2^2 + 1 \times 2^1 + 0 \times 2^0 + 1 \times 2^{-1} + 1 \times 2^{-2} = 26.75$$

In general, a number expressed in a base-r system has coefficients multiplied by powers of r:

$$a_n r^n + a_{n-1} r^{n-1} + \dots + a_2 r^2 + a_1 r + a_0 + a_{-1} r^{-1} + a_{-2} r^{-2} + \dots + a_{-m} r^{-m}$$

The coefficients $a_j$ range in value from 0 to r-1. To distinguish between numbers of different bases, we enclose the coefficients in parentheses and write a subscript equal to the base used (except sometimes for decimal numbers, where the context makes it obvious that it is decimal).
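The base-r expansion can be checked numerically. The following is a minimal Python sketch, not part of the original text; the function name `positional_value` is a hypothetical helper chosen for illustration. It multiplies each coefficient by the matching power of the radix, exactly as in the sum above.

```python
def positional_value(digits: str, radix: int) -> float:
    """Evaluate a digit string such as '11010.11' in the given radix.

    Hypothetical helper for illustration only.
    """
    integer_part, _, fraction_part = digits.partition(".")
    value = 0.0
    # Integer digits: coefficient a_j times radix**j, with j = 0 next to the radix point.
    for j, ch in enumerate(reversed(integer_part)):
        value += int(ch, radix) * radix ** j
    # Fraction digits: a_-1, a_-2, ... times negative powers of the radix.
    for k, ch in enumerate(fraction_part, start=1):
        value += int(ch, radix) * radix ** -k
    return value


print(positional_value("11010.11", 2))  # 26.75
print(positional_value("7392", 10))     # 7392.0
```

The sketch returns a float so that fractional digits can be represented; for integer-only strings, Python's built-in `int(digits, radix)` does the same job.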

1.2.1 Arithmetic Operations

Arithmetic operations with numbers in base r follow the same rules as for decimal numbers. When other than the familiar base 10 is used, one must be careful to use only the r allowable digits. Examples of addition, subtraction, and multiplication of two binary numbers are as follows:

Figure 1‑1: Binary arithmetic operations.

The sum of two binary numbers is calculated by the same rules as in decimal, except that the digits of the sum in any significant position can be only 0 or 1. Any carry obtained in a given significant position is used by the pair of digits one significant position higher. The subtraction is slightly more complicated. The rules are still the same as in decimal, except that the borrow in a given significant position adds 2 to a minuend digit. (A borrow in the decimal system adds 10 to a minuend digit.) Multiplication is very simple. The multiplier digits are always 1 or 0. Therefore, the partial products are equal either to the multiplicand or to 0.
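As an illustration of the carry rule described above, the sketch below adds two binary strings column by column. It is a hedged example rather than the method of any particular machine; the function name `add_binary` and the operands are assumptions chosen for illustration.

```python
def add_binary(a: str, b: str) -> str:
    """Add two unsigned binary strings digit by digit (hypothetical helper)."""
    width = max(len(a), len(b))
    a, b = a.zfill(width), b.zfill(width)
    carry = 0
    out = []
    # Work from the least significant position to the most significant one.
    for x, y in zip(reversed(a), reversed(b)):
        total = int(x) + int(y) + carry
        out.append(str(total % 2))  # the sum digit can only be 0 or 1
        carry = total // 2          # the carry goes to the next higher position
    if carry:
        out.append("1")
    return "".join(reversed(out))


print(add_binary("101101", "100111"))  # 1010100  (45 + 39 = 84)
```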

1.2.2 Complements

Complements are used in digital computers for simplifying the subtraction operation and for logical manipulation. There are two types of complements for each base-r system: the radix complement and the diminished radix complement. The first is referred to as the r’s complement and the second as the (r-1)‘s complement. When the value of the base r is substituted in the name, the two types are referred to as the 2’s complement and 1’s complement for binary numbers, and the 10’s complement and 9’s complement for decimal numbers.

1.2.2.1 Diminished Radix Complement

Given a number N in base r having n digits, the (r-1)’s complement of N is defined as:

$$(r^n - 1) - N$$

For decimal numbers, r = 10 and r-1 = 9, so the 9’s complement of N is $(10^n - 1) - N$. Now, $10^n$ represents a number that consists of a single 1 followed by n 0’s. $10^n - 1$ is a number represented by n 9’s. For example, if n = 4, we have $10^4 = 10{,}000$ and $10^4 - 1 = 9999$. It follows that the 9’s complement of a decimal number is obtained by subtracting each digit from 9. Some numerical examples follow.

The 9’s complement of 546700 is 999999 - 546700 = 453299.

The 9’s complement of 012398 is 999999 – 012398 = 987601.

For binary numbers, r = 2 and r-1 = 1, so the 1’s complement of N is $(2^n - 1) - N$. Again, $2^n$ is represented by a binary number that consists of a 1 followed by n 0’s. $2^n - 1$ is a binary number represented by n 1’s. For example, if n = 4, we have $2^4 = (10000)_2$ and $2^4 - 1 = (1111)_2$. Thus, the 1’s complement of a binary number is obtained by subtracting each digit from 1. However, when subtracting binary digits from 1, we can have either 1-0 = 1 or 1-1 = 0, which causes the bit to change from 0 to 1 or from 1 to 0. Therefore, the 1’s complement of a binary number is formed by changing 1’s to 0’s and 0’s to 1’s. The following are some numerical examples.

The 1’s complement of 1011000 is 0100111.

The 1’s complement of 0101101 is 1010010.
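The digit-by-digit rule for the diminished radix complement can be sketched in a few lines of Python. This is an illustrative sketch only; `diminished_radix_complement` is a hypothetical name, and the digit strings are the examples from the text.

```python
def diminished_radix_complement(digits: str, radix: int) -> str:
    """(r-1)'s complement: subtract every digit from r-1 (hypothetical helper)."""
    symbols = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"
    return "".join(symbols[radix - 1 - int(d, radix)] for d in digits)


print(diminished_radix_complement("546700", 10))  # 453299
print(diminished_radix_complement("012398", 10))  # 987601
print(diminished_radix_complement("1011000", 2))  # 0100111
print(diminished_radix_complement("0101101", 2))  # 1010010
```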

1.2.2.2 Radix Complement

The r’s complement of an n-digit number N in base r is defined as $r^n - N$ for $N \neq 0$ and 0 for N = 0. Comparing with the (r-1)’s complement, we note that the r’s complement is obtained by adding 1 to the (r-1)’s complement since $r^n - N = [(r^n - 1) - N] + 1$. Thus, the 10’s complement of decimal 2389 is 7610 + 1 = 7611 and is obtained by adding 1 to the 9’s-complement value. The 2’s complement of binary 101100 is 010011 + 1 = 010100 and is obtained by adding 1 to the 1’s-complement value.

Since $10^n$ is a number represented by a 1 followed by n 0’s, $10^n - N$, which is the 10’s complement of N, can be formed also by leaving all least significant 0’s unchanged, subtracting the first nonzero least significant digit from 10, and subtracting all higher significant digits from 9.

The 10’s complement of 012398 is 987602.

The 10’s complement of 246700 is 753300.

The 10’s complement of the first number is obtained by subtracting 8 from 10 in the least significant position and subtracting all other digits from 9. The 10’s complement of the second number is obtained by leaving the two least significant 0’s unchanged, subtracting 7 from 10, and subtracting the other three digits from 9.

Similarly, the 2’s complement can be formed by leaving all least significant 0’s and the first 1 unchanged, and replacing 1’s with 0’s and 0’s with 1’s in all other higher significant digits.

The 2’s complement of 1101100 is 0010100.

The 2’s complement of 0110111 is 1001001.

The 2’s complement of the first number is obtained by leaving the two least significant 0’s and the first 1 unchanged, and then replacing 1’s with 0’s and 0’s with 1’s in the other four most-significant digits. The 2’s complement of the second number is obtained by leaving the least significant 1 unchanged and complementing all other digits.

In the previous definitions, it was assumed that the numbers do not have a radix point. If the original number N contains a radix point, the point should be removed temporarily in order to form the r’s or (r-1)’s complement. The radix point is then restored to the complemented number in the same relative position. It is also worth mentioning that the complement of the complement restores the number to its original value. The r’s complement of N is $r^n - N$. The complement of the complement is $r^n - (r^n - N) = N$, giving back the original number.
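The radix-complement definition and the "complement of the complement" property can be verified with a short sketch. The function name `radix_complement` is a hypothetical helper; the sketch computes $r^n - N$ directly (with N = 0 mapping to 0) instead of using the digit-scanning shortcut described above.

```python
def radix_complement(digits: str, radix: int) -> str:
    """r's complement of an n-digit string: r**n - N, and 0 for N = 0.

    Hypothetical helper for illustration only.
    """
    symbols = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"
    n = len(digits)
    modulus = radix ** n
    result = (modulus - int(digits, radix)) % modulus  # the modulo handles N = 0
    out = []
    for _ in range(n):  # convert back to exactly n digits
        result, d = divmod(result, radix)
        out.append(symbols[d])
    return "".join(reversed(out))


print(radix_complement("2389", 10))    # 7611
print(radix_complement("012398", 10))  # 987602
print(radix_complement("1101100", 2))  # 0010100
# Complementing twice restores the original number.
print(radix_complement(radix_complement("0110111", 2), 2))  # 0110111
```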

1.2.2.3 Subtraction with Complements

The direct method of subtraction taught in elementary schools uses the borrow concept. In this method, we borrow a 1 from a higher significant position when the minuend digit is smaller than the subtrahend digit. This seems to be easiest when people perform subtraction with paper and pencil. When subtraction is implemented with digital hardware, this method is found to be less efficient than the method that uses complements.

The subtraction of two n-digit unsigned numbers M-N in base r can be done as follows:

1. Add the minuend M to the r’s complement of the subtrahend N. This performs $M + (r^n - N) = M - N + r^n$.

2. If $M \geq N$, the sum will produce an end carry, $r^n$, which is discarded; what is left is the result M-N.

3. If M < N, the sum does not produce an end carry and is equal to $r^n - (N - M)$, which is the r’s complement of (N - M). To obtain the answer in a familiar form, take the r’s complement of the sum and place a negative sign in front.

The following examples illustrate the procedure.

Example: Using 10’s complement, subtract 72532 - 3250.

Note that M has 5 digits and N has only 4 digits. Both numbers must have the same number of digits, so we can write N as 03250. Taking the 10’s complement of N produces a 9 in the most significant position. The occurrence of the end carry signifies that $M \geq N$ and that the result is positive.

Example: Using 10’s complement, subtract 3250 – 72532.

Note that since 3250 < 72532, the result is negative. Since we are dealing with unsigned numbers, there is really no way to get an unsigned result for this case. When subtracting with complements, the negative answer is recognized from the absence of the end carry and the complemented result. When working with paper and pencil, we can change the answer to a signed negative number in order to put it in a familiar form. Subtraction with complements is done with binary numbers in a similar manner using the same procedure outlined before.
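The three-step procedure can be traced with a small sketch using the two decimal examples above. This is a hedged illustration: `subtract_with_complement` is a hypothetical name, and the sketch works on integers with an explicit digit count rather than on digit strings.

```python
def subtract_with_complement(m: int, n: int, digits: int, radix: int = 10) -> int:
    """Compute M - N for unsigned `digits`-digit numbers via the r's complement.

    Hypothetical helper; assumes 0 <= m, n < radix**digits.
    """
    modulus = radix ** digits
    total = m + (modulus - n)          # step 1: M + (r^n - N)
    if total >= modulus:               # step 2: end carry present, so M >= N
        return total - modulus         # discard the end carry
    # Step 3: no end carry, so the sum is the r's complement of (N - M).
    return -(modulus - total)


print(subtract_with_complement(72532, 3250, 5))  # 69282
print(subtract_with_complement(3250, 72532, 5))  # -69282
```

In the first call the intermediate sum is 72532 + 96750 = 169282, and dropping the end carry leaves 69282. In the second call the sum is 03250 + 27468 = 30718 with no end carry, and its 10’s complement, 69282, is reported with a negative sign.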

1.2.3 Signed Binary Numbers

Positive integers including zero can be represented as unsigned numbers. However, to represent negative integers, we need a notation for negative values. In ordinary arithmetic, a negative number is indicated by a minus sign and a positive number by a plus sign. Because of hardware limitations, computers must represent everything with binary digits, commonly referred to as bits. It is customary to represent the sign with a bit placed in the leftmost position of the number. The convention is to make the sign bit 0 for positive and 1 for negative.

It is important to realize that both signed and unsigned binary numbers consist of a string of bits when represented in a computer. The user determines whether the number is signed or unsigned. If the binary number is signed, then the leftmost bit represents the sign and the rest of the bits represent the number. If the binary number is assumed to be unsigned, then the leftmost bit is the most significant bit of the number. For example, the string of bits 01001 can be considered as 9 (unsigned binary) or as +9 (signed binary) because the leftmost bit is 0. The string of bits 11001 represents the binary equivalent of 25 when considered as an unsigned number, or -9 when considered as a signed number because of the 1 in the leftmost position, which designates negative, and the other four bits, which represent binary 9. Usually, there is no confusion in identifying the bits if the type of representation for the number is known in advance.

The representation of the signed numbers in the last example is referred to as the signed-magnitude convention. In this notation, the number consists of a magnitude and a symbol (+ or -) or a bit (0 or 1) indicating the sign. This is the representation of signed numbers used in ordinary arithmetic. When arithmetic operations are implemented in a computer, it is more convenient to use a different system for representing negative numbers, referred to as the signed-complement system. In this system, a negative number is indicated by its complement. Whereas the signed-magnitude system negates a number by changing its sign, the signed-complement system negates a number by taking its complement. Since positive numbers always start with 0 (plus) in the leftmost position, the complement will always start with a 1, indicating a negative number. The signed-complement system can use either the 1’s or the 2’s complement, but the 2’s complement is the most common.

As an example, consider the number 9 represented in binary with eight bits. +9 is represented with a sign bit of 0 in the leftmost position followed by the binary equivalent of 9 to give 00001001. Note that all eight bits must have a value and, therefore, 0’s are inserted following the sign bit up to the first 1. Although there is only one way to represent +9, there are three different ways to represent -9 with eight bits:

In signed-magnitude representation: 10001001

In signed-1’s-complement representation: 11110110

In signed-2’s-complement representation: 11110111

In signed-magnitude, -9 is obtained from +9 by changing the sign bit in the leftmost position from 0 to 1. In signed-1’s complement, -9 is obtained by complementing all the bits of +9, including the sign bit. The signed-2’s-complement representation of –9 is obtained by taking the 2’s complement of the positive number, including the sign bit.
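The three encodings can be generated programmatically. The sketch below is illustrative only; the function names are hypothetical, and each function assumes the value fits in the given number of bits.

```python
def signed_magnitude(value: int, bits: int) -> str:
    """Sign bit followed by the magnitude in bits-1 positions (hypothetical helper)."""
    sign = "1" if value < 0 else "0"
    return sign + format(abs(value), f"0{bits - 1}b")


def ones_complement(value: int, bits: int) -> str:
    """Negative values are (2**bits - 1) - |value|, i.e. every bit inverted."""
    if value >= 0:
        return format(value, f"0{bits}b")
    return format((2 ** bits - 1) - abs(value), f"0{bits}b")


def twos_complement(value: int, bits: int) -> str:
    """Negative values are 2**bits - |value|."""
    return format(value % 2 ** bits, f"0{bits}b")


print(signed_magnitude(-9, 8))  # 10001001
print(ones_complement(-9, 8))   # 11110110
print(twos_complement(-9, 8))   # 11110111
print(twos_complement(9, 8))    # 00001001
```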

The signed-magnitude system is used in ordinary arithmetic, but is awkward when employed in computer arithmetic. Therefore, the signed-complement is normally used. The 1’s complement imposes some difficulties and is seldom used for arithmetic operations except in some older computers. The 1’s complement is useful as a logical operation since the change of 1 to 0 or 0 to 1 is equivalent to a logical complement operation, as will be shown in the next chapter. The following discussion of signed binary arithmetic deals exclusively with the signed-2’s-complement representation of negative numbers. The same procedures can be applied to the signed-1’s-complement system by including the end-around carry as done with unsigned numbers.

1.2.4 Arithmetic Addition

The addition of two numbers in the signed-magnitude system follows the rules of ordinary arithmetic. If the signs are the same, we add the two magnitudes and give the sum the common sign. If the signs are different, we subtract the smaller magnitude from the larger and give the result the sign of the larger magnitude. For example, (+25) + (-37)=-(37-25) = -12 and is done by subtracting the smaller magnitude 25 from the larger magnitude 37 and using the sign of 37 for the sign of the result. This is a process that requires the comparison of the signs and the magnitudes and then performing either addition or subtraction. The same procedure applies to binary numbers in signed-magnitude representation. In contrast, the rule for adding numbers in the signed-complement system does not require a comparison or subtraction, but only addition. The procedure is very simple and can be stated as follows for binary numbers.

The addition of two signed binary numbers with negative numbers represented in signed-2’s-complement form is obtained from the addition of the two numbers, including their sign bits. A carry out of the sign-bit position is discarded. Numerical examples for addition follow. Note that negative numbers must be initially in 2’s complement and that the sum obtained after the addition if negative is in 2’s-complement form.

In each of the four cases, the operation performed is addition with the sign bit included. Any carry out of the sign-bit position is discarded, and negative results are automatically in 2’s-complement form.
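As an illustration of the addition rule, the sketch below adds 8-bit signed-2’s-complement patterns for all four sign combinations of two operands. The operands (6 and 13) and the helper names are assumptions chosen here for illustration, not taken from the text.

```python
BITS = 8
MASK = (1 << BITS) - 1


def to_twos(value: int) -> int:
    """Encode a signed integer as an 8-bit 2's-complement pattern (hypothetical helper)."""
    return value & MASK


def from_twos(pattern: int) -> int:
    """Decode an 8-bit 2's-complement pattern back to a signed integer."""
    return pattern - (1 << BITS) if pattern & (1 << (BITS - 1)) else pattern


def add_signed(a: int, b: int) -> int:
    """Add the two patterns, sign bits included, and discard the carry out."""
    return (to_twos(a) + to_twos(b)) & MASK


for a, b in [(6, 13), (-6, 13), (6, -13), (-6, -13)]:
    s = add_signed(a, b)
    print(f"{a:+d} + {b:+d} -> {s:08b} ({from_twos(s):+d})")
# +6 + +13 -> 00010011 (+19)
# -6 + +13 -> 00000111 (+7)
# +6 + -13 -> 11111001 (-7)
# -6 + -13 -> 11101101 (-19)
```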

In order to obtain a correct answer, we must ensure that the result has a sufficient number of bits to accommodate the sum. If we start with two n-bit numbers and the sum occupies n+1 bits, we say that an overflow occurs. When one performs the addition with paper and pencil, an overflow is not a problem since we are not limited by the width of the page. We just add another 0 to a positive number and another 1 to a negative number in the most-significant position to extend them to n + 1 bits and then perform the addition. Overflow is a problem in computers because the number of bits that hold a number is finite, and a result that exceeds the finite value by 1 cannot be accommodated.
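One common way to detect overflow in a fixed-width 2’s-complement adder is to compare signs: overflow has occurred when both operands have the same sign and the truncated sum has the opposite sign. The sketch below uses that sign-comparison rule, which is an assumption added here and not a procedure stated in the text; `add_with_overflow` is a hypothetical name.

```python
def add_with_overflow(a_bits: int, b_bits: int, bits: int = 8):
    """Add two 2's-complement bit patterns; return (truncated sum, overflow flag).

    Hypothetical helper; inputs are bit patterns in the range 0 .. 2**bits - 1.
    """
    mask = (1 << bits) - 1
    sign = 1 << (bits - 1)
    total = (a_bits + b_bits) & mask
    # The sum's sign differs from the sign of BOTH operands only on overflow.
    overflow = ((a_bits ^ total) & (b_bits ^ total) & sign) != 0
    return total, overflow


print(add_with_overflow(70, 80))            # (150, True): +150 does not fit in 8 signed bits
print(add_with_overflow(0b11111010, 13))    # (7, False): -6 + 13 = +7
```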

The complement form of representing negative numbers is unfamiliar to those used to the signed-magnitude system. To determine the value of a negative number when in signed-2’s complement, it is necessary to convert it to a positive number to place it in a more familiar form. For example, the signed binary number 11111001 is negative because the leftmost bit is 1. Its 2’s complement is 00000111, which is the binary equivalent of +7. We therefore recognize the original negative number to be equal to -7.

1.2.5 Arithmetic Subtraction

Subtraction of two signed binary numbers when negative numbers are in 2’s-complement form is very simple and can be stated as follows: Take the 2’s complement of the subtrahend (including the sign bit) and add it to the minuend (including the sign bit). A carry out of the sign-bit position is discarded. This procedure works because a subtraction operation can be changed to an addition operation if the sign of the subtrahend is changed. This is demonstrated by the following relationship:

(± A)-(+ B) = (± A) + (-B)

(± A)-(- B) = (± A) + (+B)

But changing a positive number to a negative number is easily done by taking its 2’s complement. The reverse is also true because the complement of a negative number in complement form produces the equivalent positive number. Consider the subtraction of (-6) - (-13) = + 7. In binary with eight bits, this is written as (11111010-11110011). The subtraction is changed to addition by taking the 2’s complement of the subtrahend (-13) to give (+13). In binary, this is 11111010 + 00001101 = 100000111. Removing the end carry, we obtain the correct answer 00000111 (+7).
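The (-6) - (-13) example can be reproduced on 8-bit patterns with the sketch below. It is a minimal illustration; `subtract_signed` is a hypothetical name, and the inputs are assumed to be valid 8-bit patterns.

```python
def subtract_signed(minuend: int, subtrahend: int, bits: int = 8) -> int:
    """Subtract 2's-complement bit patterns by adding the 2's complement
    of the subtrahend and discarding the carry out of the sign bit.

    Hypothetical helper for illustration only.
    """
    mask = (1 << bits) - 1
    negated = (~subtrahend + 1) & mask  # 2's complement: invert all bits, add 1
    return (minuend + negated) & mask   # the mask drops the end carry


result = subtract_signed(0b11111010, 0b11110011)  # (-6) - (-13)
print(f"{result:08b}")  # 00000111
```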

It is worth noting that binary numbers in the signed-complement system are added and subtracted by the same basic addition and subtraction rules as unsigned numbers. Therefore, computers need only one common hardware circuit to handle both types of arithmetic. The user or programmer must interpret the results of such addition or subtraction differently, depending on whether it is assumed that the numbers are signed or unsigned.

1.2.6 Binary Multiplication

Binary multiplication is carried out in very much the same manner as decimal multiplication, obtaining partial products in the conventional manner, but adding the latter in binary fashion. That is, write down the multiplier and the multiplicand, multiply the entire multiplicand by each multiplier digit in turn, and add the partial results. In binary multiplication, 0 × 0 = 0, 0 × 1 = 0, 1 × 0 = 0, and 1 × 1 = 1. For example, multiply 10111 (decimal 23) by 11001 (decimal 25):

Since computers cannot handle blanks, trailing zeros should be filled in where necessary. Note that every digit in a binary number is either 1 or 0, so every step in multiplication requires either adding or not adding. This process requires the ability to add and to shift left. In a left shift, the digits migrate left, the most significant bit is lost, and the least significant bit is set to 0. Multiplication is a repeated addition. As for the multiplication of negative numbers, since addition of negative 2’s-complement numbers works, multiplication works too.
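The add-and-shift idea can be sketched as follows for unsigned operands. This is an illustrative sketch, not a description of any particular hardware multiplier; `shift_add_multiply` is a hypothetical name, and Python integers are unbounded, so no most significant bit is lost here as it would be in a fixed-width register.

```python
def shift_add_multiply(multiplicand: int, multiplier: int) -> int:
    """Multiply two unsigned integers using only add and shift (hypothetical helper)."""
    product = 0
    while multiplier:
        if multiplier & 1:            # multiplier digit is 1: add the partial product
            product += multiplicand
        multiplicand <<= 1            # shift left for the next higher digit
        multiplier >>= 1              # move on to the next multiplier digit
    return product


print(format(shift_add_multiply(0b10111, 0b11001), "b"))  # 1000111111  (23 x 25 = 575)
```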

1.2.7 Binary Division

Binary division is carried out in a manner similar to decimal division, operates symmetrically to multiplication, the difference being that one subtracts instead of adds and shifts right to divide by 2 instead of multiplying by 2, , as the following example will show: Divide 1001 (binary 9) by 0100 (binary 4)

Assume a fixed-length register (eight bits in the example below), 2’s complement number representation, and a divisor and dividend that are both positive integers. After initialization, the first step is normalization: shift the divisor to the left until its leftmost 1 is just to the right of the sign bit, counting the number of shifts. For example:

01101101 dividend (109)

00010101 divisor (21)

00101010 first shift

01010100 second shift

The next step is the subtraction and shifting loop. If the result of a subtraction is not negative, the quotient has a 1 in the current bit position; otherwise it has a 0. The quotient string is built incrementally by appending the result for the current bit position to the end of the quotient and shifting left.
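A minimal sketch of the normalization step and the subtraction and shifting loop is given here, applied to the 109 ÷ 21 example. The function name `shift_subtract_divide` and the 8-bit register width are assumptions made for illustration. The comparison `remainder >= divisor` stands in for a trial subtraction whose result is not negative.

```python
BITS = 8  # register width assumed in the example above


def shift_subtract_divide(dividend, divisor):
    """Divide two positive integers that fit below the sign bit of the register."""
    limit = 1 << (BITS - 1)
    if not (0 < divisor < limit and 0 <= dividend < limit):
        raise ValueError("operands must be positive and fit below the sign bit")

    # Normalization: shift the divisor left until its leading 1 sits
    # just to the right of the sign bit, counting the shifts.
    top_bit = 1 << (BITS - 2)
    shifts = 0
    while not divisor & top_bit:
        divisor <<= 1
        shifts += 1

    # Subtraction and shifting loop: one quotient bit per pass.
    quotient = 0
    remainder = dividend
    for _ in range(shifts + 1):
        quotient <<= 1
        if remainder >= divisor:      # the subtraction would not go negative
            remainder -= divisor
            quotient |= 1             # record a 1 in the current bit position
        divisor >>= 1                 # undo one normalization shift
    return quotient, remainder


print(shift_subtract_divide(109, 21))  # (5, 4)
```

For the example, the divisor is shifted twice (to 01010100), so the loop runs three times and builds the quotient 101 (binary 5) with remainder 4.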

1.2.8 Fixed Point (Integers)

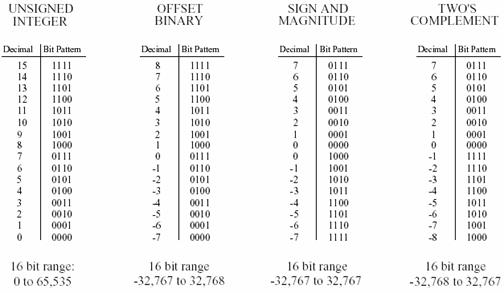

Fixed-point representation is used to store integers, the positive and negative whole numbers. In the simplest case, the $2^{16}$ = 65,536 possible bit patterns of a 16 bit number are assigned to the numbers 0 through 65,535. This is called unsigned integer format, and a simplified example is shown in Figure 1‑2 (using only 4 bits per number). Conversion between the bit pattern and the number being represented is nothing more than changing between base 2 (binary) and base 10 (decimal). The disadvantage of unsigned integer is that negative numbers cannot be represented.

Figure 1‑2: Fixed point binary representations.

Offset binary is similar to unsigned integer, except the decimal values are shifted to allow for negative numbers. In the 4 bit example of Figure 1‑2, the decimal numbers are offset by seven, resulting in the 16 bit patterns corresponding to the integer numbers -7 through 8. In this same manner, a 16 bit representation would use 32,767 as an offset, resulting in a range between -32,767 and 32,768. Offset binary is not a standardized format, and you will find other offsets used, such as 32,768. The most important use of offset binary is in ADC and DAC. For example, the input voltage range of -5 V to 5 V might be mapped to the digital numbers 0 to 4095, for a 12 bit conversion.

Sign and magnitude is another simple way of representing negative integers. The far left bit is called the sign bit, and is made a zero for positive numbers, and a one for negative numbers. The other bits are a standard binary representation of the absolute value of the number. This results in one wasted bit pattern, since there are two representations for zero, 0000 (positive zero) and 1000 (negative zero). This encoding scheme results in 16 bit numbers having a range of -32,767 to 32,767.

These first three representations are conceptually simple, but difficult to implement in hardware. Remember, when A=B+C is entered into a computer program, some hardware engineer had to figure out how to make the bit pattern representing B, combine with the bit pattern representing C, to form the bit pattern representing A. Two's complement is the format loved by hardware engineers, and is how integers are usually represented in computers. To understand the encoding pattern, look first at decimal number zero in Figure 1‑2, which corresponds to a binary zero, 0000. As we count upward, the decimal number is simply the binary equivalent (0 = 0000, 1 = 0001, 2 = 0010, 3 = 0011, etc.). If we again start at 0000 and begin subtracting, the digital hardware automatically counts in two's complement: 0 = 0000, -1 = 1111, -2 = 1110, -3 = 1101, etc. This is analogous to the odometer in a new automobile. If driven forward, it changes: 00000, 00001, 00002, 00003, and so on. When driven backwards, the odometer changes: 00000, 99999, 99998, 99997, etc.

Using 16 bits, two's complement can represent numbers from -32,768 to 32,767. The left most bit is a 0 if the number is positive or zero, and a 1 if the number is negative. Consequently, the left most bit is called the sign bit, just as in sign and magnitude representation. Converting between decimal and two's complement is straightforward for positive numbers: it is a simple decimal to binary conversion. For negative numbers, the following algorithm is often used: take the absolute value of the decimal number, convert it to binary, complement all of the bits (ones become zeros and zeros become ones), and then add 1 to the binary number. Two's complement is hard for humans, but easy for digital electronics.
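The four fixed-point formats can be compared with a small sketch using the 4 bit word of Figure 1‑2. The function names are hypothetical, the offset of seven is the one quoted above, and no range checking is done, so callers are assumed to pass values that fit the format.

```python
BITS = 4
MASK = (1 << BITS) - 1


def as_bits(pattern):
    """Format an integer bit pattern as a fixed-width binary string."""
    return format(pattern, "0{}b".format(BITS))


def unsigned(value):
    return as_bits(value)


def offset_binary(value, offset=7):
    return as_bits(value + offset)       # shift the range so -7 maps to 0000


def sign_magnitude(value):
    sign = 1 if value < 0 else 0         # far left bit is the sign bit
    return as_bits((sign << (BITS - 1)) | abs(value))


def twos_complement(value):
    if value >= 0:
        return as_bits(value)
    inverted = ~abs(value) & MASK        # complement all of the bits
    return as_bits((inverted + 1) & MASK)  # then add 1


for number in (3, 0, -1, -3):
    print(number, offset_binary(number), sign_magnitude(number), twos_complement(number))
```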

1.2.9 Real Numbers - Floating Point

The usual method used by computers to represent real numbers is floating point notation. The encoding scheme for floating point numbers is more complicated than for fixed point. There are many varieties of floating point notation and each has individual characteristics. The key concept is that a real number is represented by a number called a mantissa, times a base raised to an integer power, called an exponent. The base is usually fixed, and the mantissa and exponent vary to represent different real numbers. For example, the number 387.53 could be represented as $38753 \times 10^{-2}$. The mantissa is 38753, and the exponent is -2. Other possible representations are $0.38753 \times 10^{3}$ and $387.53 \times 10^{0}$. We choose the representation in which the mantissa is an integer with no trailing 0s.

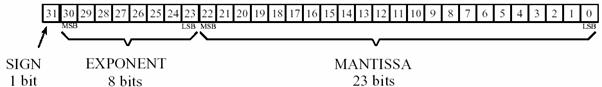

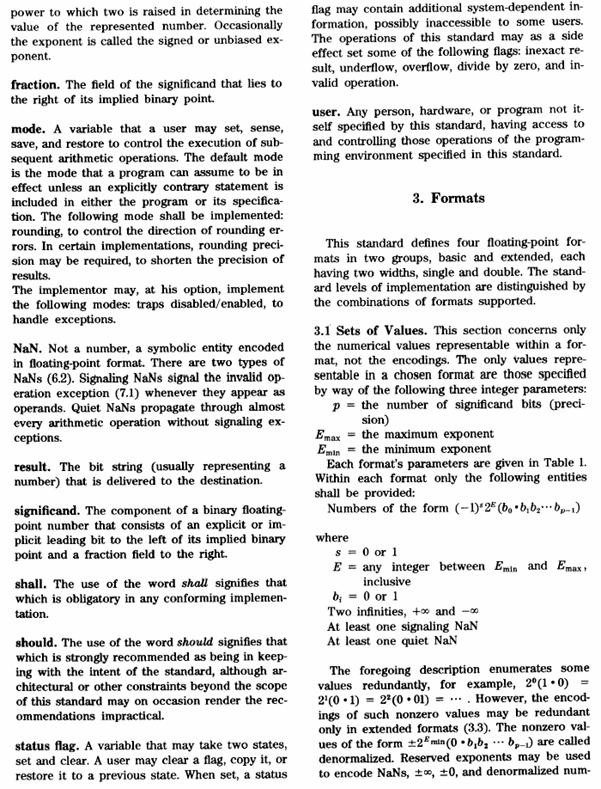

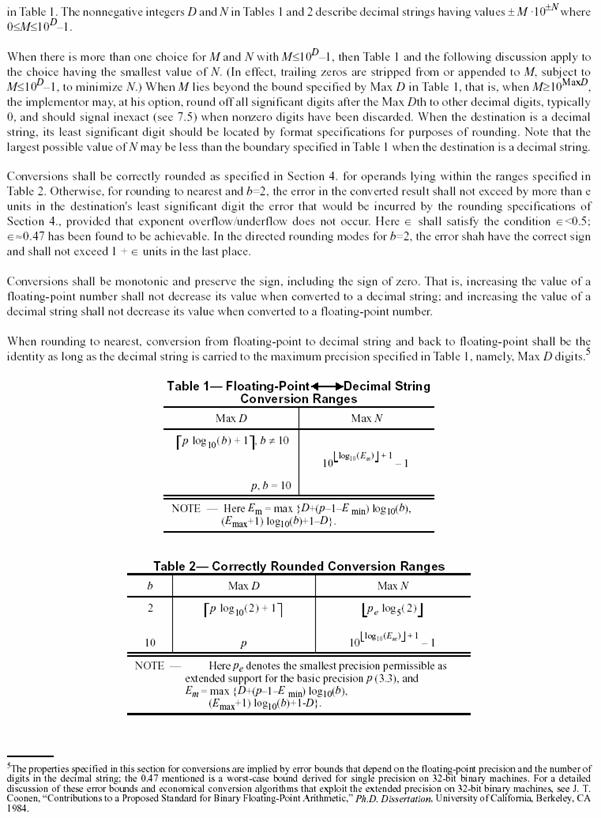

Floating point representation is similar to scientific notation, except everything is carried out in base two, rather than base ten. While several similar formats are in use, the most common is ANSI/IEEE Std. 754-1985. This standard defines the format for 32 bit numbers called single precision, as well as 64 bit numbers called double precision. As shown in Figure 1‑3, the 32 bits used in single precision are divided into three separate groups: bits 0 through 22 form the mantissa, bits 23 through 30 form the exponent, and bit 31 is the sign bit.

Figure 1‑3: Single precision floating point bit pattern.

These bits form the floating-point number, v, by the following relation:

$$v = (-1)^{S} \times M \times 2^{E-127}$$

where S is the value of the sign bit, M is the value of the mantissa, and E is the value of the exponent. The term $(-1)^{S}$ simply means that the sign bit, S, is 0 for a positive number and 1 for a negative number. The variable, E, is the number between 0 and 255 represented by the eight exponent bits. Subtracting 127 from this number allows the exponent term to run from $2^{-127}$ to $2^{128}$. In other words, the exponent is stored in offset binary with an offset of 127. The mantissa, M, is formed from the 23 bits as a binary fraction. For example, the decimal fraction 2.783 is interpreted as 2 + 7/10 + 8/100 + 3/1000. The binary fraction 1.0101 means 1 + 0/2 + 1/4 + 0/8 + 1/16. Floating point numbers are normalized in the same way as scientific notation, that is, there is only one nonzero digit left of the decimal point (called a binary point in base 2). Since the only nonzero digit that exists in base two is 1, the leading digit in the mantissa will always be a 1, and therefore does not need to be stored. Removing this redundancy allows the number to have an additional one bit of precision. The 23 stored bits, referred to by the notation $m_{22}, m_{21}, m_{20}, \ldots, m_{0}$, form the mantissa according to:

$$M = 1.m_{22}\,m_{21}\,m_{20} \cdots m_{2}\,m_{1}\,m_{0} \quad \text{(binary)}$$

In other words, $M = 1 + m_{22}2^{-1} + m_{21}2^{-2} + m_{20}2^{-3} + \cdots$. If bits 0 through 22 are all zeros, M takes on the value of one. If bits 0 through 22 are all ones, M is just a hair under two, i.e., $2 - 2^{-23}$.

Using this encoding scheme, the largest number that can be represented is $\pm(2 - 2^{-23}) \times 2^{128} = \pm 6.8 \times 10^{38}$. Likewise, the smallest number that can be represented is $\pm 1.0 \times 2^{-127} = \pm 5.9 \times 10^{-39}$. The IEEE standard reduces this range slightly to free bit patterns that are assigned special meanings. In particular, the largest and smallest numbers allowed in the standard are $\pm 3.4 \times 10^{38}$ and $\pm 1.2 \times 10^{-38}$ respectively. The freed bit patterns allow three special classes of numbers. First, ±0 is defined as all of the mantissa and exponent bits being zero. Second, ±∞ is defined as all of the mantissa bits being zero and all of the exponent bits being one. Third, there is a group of very small unnormalized numbers between $\pm 1.2 \times 10^{-38}$ and $\pm 1.4 \times 10^{-45}$. These are lower precision numbers obtained by removing the requirement that the leading digit in the mantissa be a one. Besides these three special classes, there are bit patterns that are not assigned a meaning, commonly referred to as NANs (Not A Number).
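The split into sign, exponent, and mantissa can be inspected with Python's standard `struct` module. This is a sketch for normalized numbers only (stored exponent 1 through 254); the special classes described above are not handled, and the names `decode_single` and `rebuild` are hypothetical.

```python
import struct


def decode_single(value):
    """Split a number, stored as single precision, into S, E and M."""
    (bits,) = struct.unpack(">I", struct.pack(">f", value))
    sign = bits >> 31                    # bit 31
    exponent = (bits >> 23) & 0xFF       # bits 23 through 30
    fraction = bits & 0x7FFFFF           # bits 0 through 22
    mantissa = 1 + fraction / 2**23      # restore the implied leading 1
    return sign, exponent, mantissa


def rebuild(sign, exponent, mantissa):
    """Apply v = (-1)^S x M x 2^(E - 127)."""
    return (-1) ** sign * mantissa * 2.0 ** (exponent - 127)


s, e, m = decode_single(-6.5)
print(s, e, m, rebuild(s, e, m))  # 1 129 1.625 -6.5
```

Here -6.5 is $-1.625 \times 2^{2}$, so the stored exponent is 2 + 127 = 129.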

The IEEE standard for double precision simply adds more bits to both the mantissa and exponent. Of the 64 bits used to store a double precision number, bits 0 through 51 are the mantissa, bits 52 through 62 are the exponent, and bit 63 is the sign bit. As before, the mantissa is between one and just under two. The 11 exponent bits form a number between 0 and 2047, with an offset of 1023, allowing exponents from $2^{-1023}$ to $2^{1024}$. The largest and smallest numbers allowed are $\pm 1.8 \times 10^{308}$ and $\pm 2.2 \times 10^{-308}$ respectively. These are incredibly large and small numbers! It is quite uncommon to find an application where single precision is not adequate. You will probably never find a case where double precision limits what you want to accomplish.

1.2.10 Number Precision

The errors associated with number representation are very similar to quantization errors during ADC. You want to store a continuous range of values; however, you can represent only a finite number of quantized levels. Every time a new number is generated, after a math calculation for example, it must be rounded to the nearest value that can be stored in the format you are using.

As an example, imagine that you allocate 32 bits to store a number. Since there are exactly $2^{32}$ = 4,294,967,296 different bit patterns possible, you can represent exactly 4,294,967,296 different numbers. Some programming languages allow a variable called a long integer, stored as 32 bits, fixed point, two's complement. This means that the 4,294,967,296 possible bit patterns represent the integers between -2,147,483,648 and 2,147,483,647. In comparison, single precision floating point spreads these 4,294,967,296 bit patterns over the much larger range: $-3.4 \times 10^{38}$ to $3.4 \times 10^{38}$.

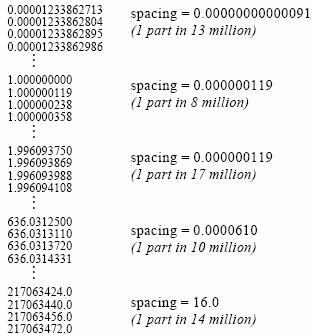

With fixed-point variables, the gaps between adjacent numbers are always exactly one. In floating point notation, the gaps between adjacent numbers vary over the represented number range. If we randomly pick a floating point number, the gap next to that number is approximately ten million times smaller than the number itself (to be exact, $2^{-24}$ to $2^{-23}$ times the number). This is a key concept of floating point notation: large numbers have large gaps between them, while small numbers have small gaps. Figure 1‑4 illustrates this by showing consecutive floating-point numbers, and the gaps that separate them.

Figure 1‑4: The gaps between adjacent numbers.
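The varying gap size can be measured directly by stepping to the next single precision bit pattern. This sketch assumes positive, finite, normalized inputs; the helper name `next_single` is hypothetical.

```python
import struct


def next_single(value):
    """Return the next larger single precision number above a positive value."""
    (bits,) = struct.unpack(">I", struct.pack(">f", value))
    return struct.unpack(">f", struct.pack(">I", bits + 1))[0]


for x in (1.0, 1000.0, 1.0e6):
    gap = next_single(x) - x
    # the ratio gap / x always falls between 2**-24 and 2**-23
    print(x, gap, gap / x)
```

The gap near 1.0 is $2^{-23}$ (about $1.2 \times 10^{-7}$), while near one million it has grown to 0.0625.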

2 DSP Review

2.1 Basics

Digital Signal Processing (DSP) is used in a wide variety of applications, and it is hard to find a definition that is general. We can start with dictionary definitions of the words. Digital means operating by the use of discrete signals to represent data in the form of numbers. A signal is a variable parameter by which information is conveyed through an electronic circuit. Processing means performing operations on data according to programmed instructions. This leads us to a simple definition: Digital Signal Processing is changing or analyzing information which is measured as discrete sequences of numbers. Note two unique features of Digital Signal Processing as opposed to plain old ordinary digital processing. First, signals come from the real world; this intimate connection with the real world leads to many unique needs, such as the need to react in real time and the need to measure signals and convert them to digital numbers. Second, signals are discrete, which means the information in between discrete samples is lost.

The advantages of DSP are common to many digital systems and include versatility, repeatability, and simplicity. Versatility: digital systems can be reprogrammed for other applications (at least where programmable DSP chips are used) and digital systems can be ported to different hardware (for example a different DSP chip or board level product). Repeatability: digital systems can be easily duplicated, digital systems do not depend on strict component tolerances, and digital system responses do not drift with temperature. Simplicity: some things can be done more easily digitally than with analogue systems.

Figure 2‑1: Applications of Digital Signal Processing.

DSP is used in a very wide variety of applications (Figure 2‑1) but most share some common features: they use a lot of maths (multiplying and adding signals), they deal with signals that come from the real world, and they require a response in a certain time. Where general purpose DSP processors are concerned, most applications deal with signal frequencies that are in the audio range.

2.2 Converting Analogue Signals

Most DSP applications deal with analogue signals where the analogue signal has to be converted to digital form as shown in Figure 2‑2. The analogue signal - a continuous variable defined with infinite precision - is converted to a discrete sequence of measured values which are represented digitally. Information is lost in converting from analogue to digital, due to: inaccuracies in the measurement, uncertainty in timing, and the limits on the duration of the measurement. These effects are called quantization errors.

Figure 2‑2: The analog to digital process of DSP.

Figure 2‑3: Digitization of an analog signal.

The continuous analogue signal has to be held before it can be sampled. Otherwise, the signal would be changing during the measurement. Only after it has been held can the signal be measured, and the measurement converted to a digital value (Figure 2‑3).

Figure 2‑4: Sampled signal.

The sampling results in a discrete set of digital numbers that represent measurements of the signal - usually taken at equal intervals of time (Figure 2‑4). Note that the sampling takes place after the hold. This means that we can sometimes use a slower Analogue to Digital Converter (ADC) than might seem required at first sight. The hold circuit must act fast enough that the signal is not changing during the time the circuit is acquiring the signal value, but the ADC has all the time that the signal is held to make its conversion. We don't know what we don't measure. In the process of measuring the signal, some information is lost (Figure 2‑5). Sometimes we may have some a priori knowledge of the signal, or be able to make some assumptions that will let us reconstruct the lost information.

Figure 2‑5: The loss of information during sampling.

2.3 Aliasing

We only sample the signal at intervals, so we do not know what happened between the samples. A crude example is a 'glitch' that happens to fall between adjacent samples. Since we do not measure it, we have no way of knowing the glitch was there at all.

Figure 2‑6: The aliasing artifact. Loss of information.

In a less obvious case, we might have signal components that are varying rapidly in between samples. Again, we could not track these rapid inter-sample variations (Figure 2‑6). Therefore, we must sample fast enough to see the most rapid changes in the signal. Sometimes we may have some a priori knowledge of the signal, or be able to make some assumptions about how the signal behaves in between samples. If we do not sample fast enough, we cannot track completely the most rapid changes in the signal.

Figure 2‑7: Sampling at slow rates.

Some higher frequencies can be incorrectly interpreted as lower ones. In Figure 2‑7, the high frequency signal is sampled just under twice every cycle. The result is that each sample is taken at a slightly later part of the cycle. If we draw a smooth connecting line between the samples, the resulting curve looks like a lower frequency. This is called 'aliasing' because one frequency looks like another.

Note that the problem of aliasing is that we cannot tell which frequency we have - a high frequency looks like a low one so we cannot tell the two apart. But sometimes we may have some a priori knowledge of the signal, or be able to make some assumptions about how the signal behaves in between samples, that will allow us to tell unambiguously what we have.

Nyquist showed that to distinguish unambiguously between all signal frequency components we must sample faster than twice the frequency of the highest frequency component.

Figure 2‑8: Sampling high frequency signal. A continuous signal sampled twice per cycle has enough information to be reconstructed.

In Figure 2‑8, the high frequency signal is sampled twice every cycle. If we draw a smooth connecting line between the samples, the resulting curve looks like the original signal. But if the samples happened to fall at the zero crossings, we would see no signal at all - this is why the sampling theorem demands we sample faster than twice the highest signal frequency. This avoids aliasing. The highest signal frequency allowed for a given sample rate is called the Nyquist frequency. Actually, Nyquist says that we have to sample faster than twice the signal bandwidth, not twice the highest frequency. But this leads us into multirate signal processing, which is a more advanced subject.
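Aliasing can be demonstrated numerically: a tone above the Nyquist frequency produces exactly the same samples as a lower tone. The sample rate of 1000 Hz and the tone frequencies of 900 Hz and 100 Hz are illustrative values chosen for this sketch, not taken from the figures.

```python
import math


def sample(frequency, sample_rate, count):
    """Return `count` samples of a cosine of the given frequency."""
    return [math.cos(2 * math.pi * frequency * n / sample_rate) for n in range(count)]


sample_rate = 1000.0                       # Hz, so the Nyquist frequency is 500 Hz
high = sample(900.0, sample_rate, 50)      # tone above the Nyquist frequency
low = sample(100.0, sample_rate, 50)       # its alias at 1000 - 900 = 100 Hz

# Largest difference between the two sample sets: only rounding error remains.
worst = max(abs(h - l) for h, l in zip(high, low))
print(worst)
```

Once sampled, nothing in the numbers distinguishes the 900 Hz tone from the 100 Hz tone.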

2.4 Antialiasing

Nyquist showed that to distinguish unambiguously between all signal frequency components we must sample faster than twice the frequency of the highest frequency component. To avoid aliasing, we simply filter out all the high frequency components before sampling (Figure 2‑9).

Figure 2‑9: The effect of antialiasing filter. High frequency components are removed through a low pass filter.

Note that antialias filters must be analogue - it is too late once you have done the sampling. This simple brute force method avoids the problem of aliasing. But it does remove information - if the signal had high frequency components, we cannot now know anything about them.

Although Nyquist showed that, provided we sample faster than twice the highest signal frequency, we have all the information needed to reconstruct the signal, the sampling theorem does not say the samples will look like the signal.

Figure 2‑10: A high frequency signal sampled fast enough may still look wrong but can be reconstructed.

Figure 2‑10 shows a high frequency sine wave that is nevertheless sampled fast enough according to Nyquist's sampling theorem - just more than twice per cycle. When straight lines are drawn between the samples, the signal's frequency is indeed evident - but it looks as though the signal is amplitude modulated. This effect arises because each sample is taken at a slightly earlier part of the cycle. Unlike aliasing, the effect does not change the apparent signal frequency. The answer lies in the fact that the sampling theorem says there is enough information to reconstruct the signal - and the correct reconstruction is not just to draw straight lines between samples.

The signal is properly reconstructed from the samples by low pass filtering: the low pass filter should be the same as the original antialias filter. The reconstruction filter interpolates between the samples to make a smoothly varying analogue signal. In Figure 2‑11, the reconstruction filter interpolates between samples in a 'peaky' way that seems at first sight to be strange. The explanation lies in the shape of the reconstruction filter's impulse response.

Figure 2‑11: A sampled signal must be low-pass filtered to reconstruct the original.

The impulse response of the reconstruction filter has a classic 'sin(x)/x' shape. The stimulus fed to this filter is the series of discrete impulses which are the samples. Every time an impulse hits the filter, we get 'ringing' - and it is the superposition of all these peaky rings that reconstructs the proper signal. If the signal contains frequency components that are close to the Nyquist frequency, then the reconstruction filter has to be very sharp indeed. This means it will have a very long impulse response - and so the long 'memory' needed to fill in the signal even in the region of the low amplitude samples.
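The superposition of sin(x)/x responses can be sketched as a direct sum, with time measured in sample periods. This is an idealized illustration: the sum is truncated to a finite window of 401 samples (an assumption of this sketch), so the value between samples is only approximate. The tone at 0.4 cycles per sample is an illustrative choice.

```python
import math


def sinc(x):
    """The sin(x)/x shaped impulse response, normalized to cross zero at integers."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def reconstruct(samples, first_index, t):
    """Sum one scaled sinc response per sample, evaluated at time t."""
    return sum(s * sinc(t - (first_index + k)) for k, s in enumerate(samples))


frequency = 0.4                                   # cycles per sample, below 0.5
first = -200
samples = [math.sin(2 * math.pi * frequency * n) for n in range(first, 201)]

t = 0.5                                           # halfway between two samples
estimate = reconstruct(samples, first, t)
exact = math.sin(2 * math.pi * frequency * t)
print(estimate, exact)
```

The small residual difference between the two printed values comes from truncating the sum, which mirrors the remark above that a sharp reconstruction filter needs a very long impulse response.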

2.5 Frequency resolution

Sampling of the signal is done only for a certain time. We cannot see slow changes in the signal if we don't wait long enough. In fact we must sample for long enough to detect not only low frequencies in the signal, but also small differences between frequencies. The length of time for which we are prepared to sample the signal determines our ability to resolve adjacent frequencies - the frequency resolution.

We must sample for at least one complete cycle of the lowest frequency we want to resolve. We can see that we face a forced compromise. We must sample fast to avoid aliasing, and for a long time to achieve good frequency resolution. But sampling fast for a long time means we will have a lot of samples - and lots of samples means lots of computation, for which we generally don't have time. So we will have to compromise between resolving frequency components of the signal, and being able to see high frequencies.
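The compromise can be put into numbers with a rough sketch. It assumes the rule stated above (one complete cycle of the lowest frequency to resolve, so the duration is the reciprocal of the resolution) and uses twice the highest frequency as the lower bound on the sample rate. The function name and the example figures are hypothetical.

```python
def minimum_sampling_plan(highest_hz, resolution_hz):
    """Return (sample rate bound, duration, sample count bound) for a measurement."""
    sample_rate = 2.0 * highest_hz        # the actual rate must exceed this bound
    duration = 1.0 / resolution_hz        # one full cycle of the resolution frequency
    return sample_rate, duration, sample_rate * duration


rate, seconds, count = minimum_sampling_plan(20000.0, 1.0)
print(rate, seconds, count)  # 40000.0 1.0 40000.0
```

Resolving 1 Hz across a 20 kHz band therefore needs more than 40,000 samples per measurement, which shows how quickly the computation grows.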

2.6 Quantization

When the signal is converted to digital form, the precision is limited by the number of bits available. Figure 2‑12 shows an analogue signal which is then converted to a digital representation - in this case, with 8 bit precision. The smoothly varying analogue signal can only be represented as a 'stepped' waveform due to the limited precision. Sadly, the errors introduced by digitization are both non linear and signal dependent. Non linear means we cannot calculate their effects using normal math. Signal dependent means the errors are coherent and so cannot be reduced by simple means.

This is a common problem in DSP. The errors due to limited precision (i.e. word length) are non linear (hence incalculable) and signal dependent (hence coherent). Both are bad news, and mean that we cannot really calculate how a DSP algorithm will perform in limited precision - the only reliable way is to implement it, and test it against signals of the type expected. The non linearity can also lead to instability - particularly with IIR filters. The word length of hardware used for DSP processing determines the available precision and dynamic range.

Figure 2‑12: Limited precision leads to errors which are signal dependent.

Figure 2‑13: Timing error leads to value error. An accurate clock leads to accurate values; an error in the clock translates into an error in the values.

Uncertainty in the clock timing leads to errors in the sampled signal. Figure 2‑13 shows an analogue signal which is held on the rising edge of a clock signal. If the clock edge occurs at a different time than expected, the signal will be held at the wrong value. Sadly, the errors introduced by timing error are both non linear and signal dependent.

A real DSP system suffers from three sources of error due to limited word length in the measurement and processing of the signal: limited precision due to word length when the analogue signal is converted to digital form, errors in arithmetic due to limited precision within the processor itself, and limited precision due to word length when the digital samples are converted back to analogue form.

These errors are often called quantization error. The effects of quantization error are in fact both non linear and signal dependent. Non linear means we cannot calculate their effects using normal math. Signal dependent means that even if we could calculate their effect, we would have to do so separately for every type of signal we expect. A simple way to get an idea of the effects of limited word length is to model each of the sources of quantization error as if it were a source of random noise.

The model of quantization as injections of random noise is helpful in gaining an idea of the effects. But it is not actually accurate, especially for systems with feedback like IIR filters. The effect of quantization error is often similar to an injection of random noise.

Figure 2‑14: Quantization error. The spectrum of a pure tone is noisy when quantized.

Figure 2‑14 shows the spectrum calculated from a pure tone. The top plot shows the spectrum with high precision (double precision floating point) and the bottom plot shows the spectrum when the sine wave is quantized to 16 bits. The effect looks very like low level random noise. The signal to noise ratio is affected by the number of bits in the data format, and by whether the data is fixed point or floating point.
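The dependence of signal to noise ratio on word length can be estimated by quantizing a sine wave and measuring the error power. This is a sketch under stated assumptions: a full-scale sine, uniform rounding with no clipping, and an arbitrary tone frequency. The measured values can be compared with the familiar rule of thumb of roughly 6.02 dB per bit plus 1.76 dB, which is not derived in this text.

```python
import math


def quantize(value, bits):
    """Round a value to the nearest multiple of the step size 2 / 2**bits."""
    step = 2.0 / 2**bits                  # full-scale range -1..1 split into 2**bits steps
    return round(value / step) * step


def snr_db(bits, count=20000, frequency=0.01234567):
    """Measure the signal to quantization noise ratio of a full-scale sine, in dB."""
    signal = [math.sin(2 * math.pi * frequency * n) for n in range(count)]
    noise = [quantize(s, bits) - s for s in signal]
    signal_power = sum(s * s for s in signal) / count
    noise_power = sum(e * e for e in noise) / count
    return 10 * math.log10(signal_power / noise_power)


for bits in (8, 12, 16):
    print(bits, round(snr_db(bits), 1), round(6.02 * bits + 1.76, 1))
```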

2.7 Summary

A DSP system has three fundamental sources of limitation:

- loss of information because we only take samples of the signal at intervals

- loss of information because we only sample the signal for a certain length of time

- errors due to limited precision (i.e. word length) in data storage and arithmetic

The effects of these limitations are as follows:

- aliasing is the result of sampling, which means we cannot distinguish between high and low frequencies

- limited frequency resolution is the result of limited duration of sampling, which means we cannot distinguish between adjacent frequencies

- quantization error is the result of limited precision (word length) when converting between analogue and digital forms, when storing data, or when performing arithmetic

Aliasing and frequency resolution are fundamental limitations - they arise from the mathematics and cannot be overcome. They are limitations of any sampled data system, not just digital ones.

Quantization error is an artifact of the imperfect precision, and can be improved upon by using an increased word length. It is a feature peculiar to digital systems. Its effects are non linear and signal dependent, but can sometimes be acceptably modeled as injections of random noise.

3 Introduction to Digital Signal Processors

3.1 How DSPs are Different from Other Microprocessors

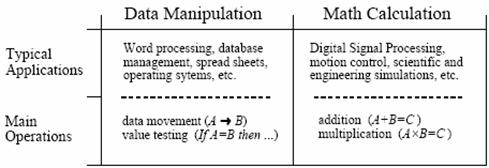

The last forty years have shown that computers are extremely capable in two broad areas, data manipulation, such as word processing and database management, and mathematical calculation, used in science, engineering, and Digital Signal Processing. All microprocessors can perform both tasks; however, it is difficult (expensive) to make a device that is optimized for both. There are technical tradeoffs in the hardware design, such as the size of the instruction set and how interrupts are handled. Even more important, there are marketing issues involved: development and manufacturing cost, competitive position, product lifetime, and so on. As a broad generalization, these factors have made traditional microprocessors, such as the Pentium, primarily directed at data manipulation. Similarly, DSPs are designed to perform the mathematical calculations needed in Digital Signal Processing.

Figure 3‑1: Data manipulation versus mathematical calculation.

Figure 3‑1 lists the most important differences between these two categories. Data manipulation involves storing and sorting information. For instance, consider a word processing program. The basic task is to store the information (typed in by the operator), organize the information (cut and paste, spell checking, page layout, etc.), and then retrieve the information (such as saving the document on a floppy disk or printing it with a laser printer). These tasks are accomplished by moving data from one location to another, and testing for inequalities (A=B, A<B, etc.). As an example, imagine sorting a list of words into alphabetical order. Each word is represented by an 8 bit number, the ASCII value of the first letter in the word. Alphabetizing involves rearranging the order of the words until the ASCII values continually increase from the beginning to the end of the list. This can be accomplished by repeating two steps over-and-over until the alphabetization is complete. First, test two adjacent entries for being in alphabetical order (IF A>B THEN ...). Second, if the two entries are not in alphabetical order, switch them so that they are (A↔B). When this two-step process is repeated many times on all adjacent pairs, the list will eventually become alphabetized.

As another example, consider how a document is printed from a word processor. The computer continually tests the input device (mouse or keyboard) for the binary code that indicates, "print the document." When this code is detected, the program moves the data from the computer's memory to the printer. Here we have the same two basic operations: moving data and inequality testing. While mathematics is occasionally used in this type of application, it is infrequent and does not significantly affect the overall execution speed.

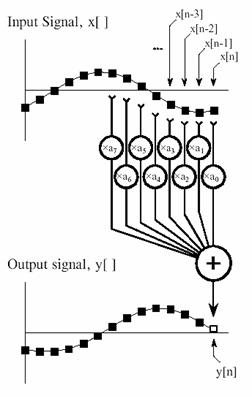

Figure 3‑2: FIR digital filter. Each sample in the output signal is found by multiplying samples from the input signal by the kernel coefficients and summing the products.

In comparison, the execution speed of most DSP algorithms is limited almost completely by the number of multiplications and additions required. For example, Figure 3‑2 shows the implementation of an FIR digital filter, the most common DSP technique. Using the standard notation, the input signal is referred to by x[ ], while the output signal is denoted by y[ ]. Our task is to calculate the sample at location n in the output signal. An FIR filter performs this calculation by multiplying appropriate samples from the input signal by a group of coefficients, denoted by a0, a1, a2, a3, …, and then adding the products. This is simply saying that the input signal has been convolved with a filter kernel consisting of a0, a1, a2, a3, …. Depending on the application, there may only be a few coefficients in the filter kernel, or many thousands. While there is some data transfer and inequality evaluation in this algorithm, such as to keep track of the intermediate results and control the loops, the math operations dominate the execution time.
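The multiply and add structure of the FIR filter can be written out as a short sketch. It treats input samples before the start of the signal as zero, which is an assumption of this example, and the two-coefficient averaging kernel is purely illustrative.

```python
def fir_filter(x, coefficients):
    """Compute y[n] = a0*x[n] + a1*x[n-1] + a2*x[n-2] + ... for every n."""
    y = []
    for n in range(len(x)):
        accumulator = 0.0
        for k, a in enumerate(coefficients):
            if n - k >= 0:                     # samples before the start count as zero
                accumulator += a * x[n - k]    # one multiply and one add per coefficient
        y.append(accumulator)
    return y


print(fir_filter([1.0, 2.0, 3.0, 4.0], [0.5, 0.5]))  # [0.5, 1.5, 2.5, 3.5]
```

The inner loop is a single multiply and accumulate repeated once per coefficient, which is why the math operations dominate the execution time.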

In addition to performing mathematical calculations very rapidly, DSPs must also have a predictable execution time. Suppose you launch your desktop computer on some task, say, converting a word-processing document from one form to another. It does not matter if the processing takes ten milliseconds or ten seconds; you simply wait for the action to be completed before you give the computer its next assignment.

In comparison, most DSPs are used in applications where the processing is continuous, not having a defined start or end. For instance, consider an engineer designing a DSP system for an audio signal, such as a hearing aid. If the digital signal is being received at 20,000 samples per second, the DSP must be able to maintain a sustained throughput of 20,000 samples per second. However, there are important reasons not to make it any faster than necessary. As the speed increases, so does the cost, the power consumption, the design difficulty, and so on. This makes an accurate knowledge of the execution time critical for selecting the proper device, as well as the algorithms that can be applied.

3.2 Characteristics of DSP processors

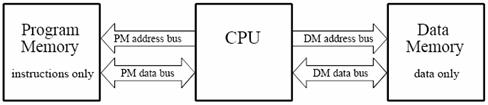

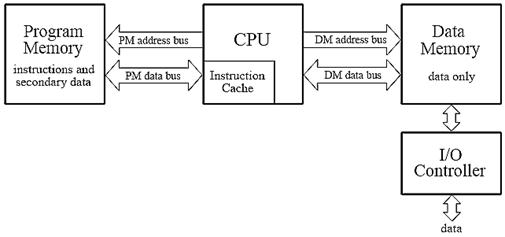

Although there are many DSP processors, they are mostly designed with the same few basic operations in mind, so they share the same set of basic characteristics. These characteristics fall into three categories:

- Specialized high speed arithmetic

- Data transfer to and from the real world

- Multiple access memory architectures

Figure 3‑3: Typical DSP operations require specific functions.

Typical DSP operations require a few specific operations. Figure 3‑3 shows an FIR filter and illustrates the basic DSP operations: additions and multiplications, delays, and array handling. Each of these operations has its own special set of requirements. Additions and multiplications require us to fetch two operands, perform the addition or multiplication (usually both), store the result, or hold it for a repetition. Delays require us to hold a value for later use. Array handling requires us to fetch values from consecutive memory locations, and copy data from memory to memory. To suit these fundamental operations DSP processors often have parallel multiply and add, multiple memory accesses (to fetch two operands and store the result), lots of registers to hold data temporarily, efficient address generation for array handling, and special features such as delays or circular addressing.

3.2.1 Circular Buffering

Digital Signal Processors are designed to quickly carry out FIR filters and similar techniques. To understand the hardware, we must first understand the algorithms. To start, we need to distinguish between off-line processing and real-time processing. In off-line processing, the entire input signal resides in the computer at the same time. For example, a geophysicist might use a seismometer to record the ground movement during an earthquake. After the shaking is over, the information may be read into a computer and analyzed in some way. Another example of off-line processing is medical imaging, such as CT and MRI. The data set is acquired while the patient is inside the machine, but the image reconstruction may be delayed until a later time. The key point is that all of the information is simultaneously available to the processing program. This is common in scientific research and engineering, but not in consumer products. Off-line processing is the realm of personal computers and mainframes.

(a) (b)

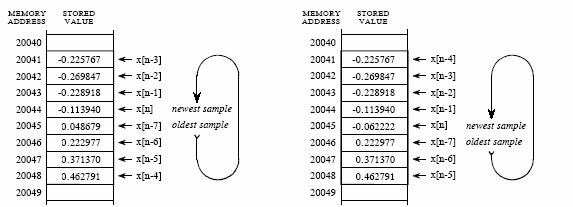

Figure 3‑4: Circular buffer operation. (a) Circular buffer at some instant; (b) circular buffer after the next sample.

In real-time processing, the output signal is produced at the same time that the input signal is being acquired. For example, this is needed in telephone communication, hearing aids, and radar. These applications must have the information immediately available, although it can be delayed by a short amount. For instance, a 10-millisecond delay in a telephone call cannot be detected by the speaker or listener. Likewise, it makes no difference if a radar signal is delayed by a few seconds before being displayed to the operator. Real-time applications input a sample, perform the algorithm, and output a sample, over and over. Alternatively, they may input a group of samples, perform the algorithm, and output a group of samples. This is the world of Digital Signal Processors.

Now imagine that the FIR filter in Figure 3‑2 is being implemented in real time. To calculate the output sample, we must have access to a certain number of the most recent samples from the input. For example, suppose we use eight coefficients in this filter. This means we must know the value of the eight most recent samples from the input signal. These eight samples must be stored in memory and continually updated as new samples are acquired. What is the best way to manage these stored samples? The answer is circular buffering. Figure 3‑4 illustrates an eight-sample circular buffer. We have placed this circular buffer in eight consecutive memory locations, 20041 to 20048. Figure 3‑4 (a) shows how the eight samples from the input might be stored at one particular instant in time, while (b) shows the changes after the next sample is acquired. The idea of circular buffering is that the end of this linear array is connected to its beginning; memory location 20041 is viewed as being next to 20048, just as 20044 is next to 20045. You keep track of the array with a pointer that indicates where the most recent sample resides. For instance, in (a) the pointer contains the address 20044, while in (b) it contains 20045. When a new sample is acquired, it replaces the oldest sample in the array, and the pointer is moved one address ahead. Circular buffers are efficient because only one value needs to be changed when a new sample is acquired.
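The circular buffer described above can be modelled in software as follows. This is a minimal sketch, not the mechanism of any particular DSP: the names `CircularBuffer`, `push`, `recent` and `fir_step` are illustrative, and list indices stand in for the memory addresses 20041 to 20048. A DSP would perform the wraparound in its address generation hardware rather than with a modulo operation.

```python
class CircularBuffer:
    """Software model of a fixed-length buffer of the most recent samples."""

    def __init__(self, length):
        self.data = [0.0] * length
        # Index of the most recent sample; the first push lands at index 0.
        self.newest = length - 1

    def push(self, sample):
        # Move the pointer one location ahead, wrapping at the end of the
        # array. The location it now points to holds the oldest sample,
        # which is the only value that gets overwritten.
        self.newest = (self.newest + 1) % len(self.data)
        self.data[self.newest] = sample

    def recent(self, k):
        """Return the sample acquired k steps ago (k = 0 is the newest)."""
        return self.data[(self.newest - k) % len(self.data)]


def fir_step(buf, coeffs, sample):
    """Accept one input sample and return one FIR output sample."""
    buf.push(sample)
    acc = 0.0
    for k, h in enumerate(coeffs):
        acc += h * buf.recent(k)  # one multiply-accumulate per coefficient
    return acc


# Two-tap moving average as a small usage example.
buf = CircularBuffer(2)
outputs = [fir_step(buf, [0.5, 0.5], x) for x in [2.0, 4.0, 6.0]]
print(outputs)
```

Each call to `fir_step` changes exactly one stored value, whatever the filter length, which is the efficiency argument made above.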

3.2.2 Mathematics

To perform the simple arithmetic required, DSP processors need special high-speed arithmetic units. Most DSP operations require additions and multiplications together. So DSP processors usually have hardware adders and multipliers which can be used in parallel within a single instruction. Figure 3‑5 shows the data path for the Lucent DSP32C processor. The hardware multiply and add work in parallel so that in the space of a single instruction, both an add and a multiply can be completed.

Figure 3‑5: The data path for the Lucent DSP32C processor.

Delays require that intermediate values be held for later use. This may also be a requirement, for example, when keeping a running total - the total can be kept within the processor to avoid wasting repeated reads from and writes to memory. For this reason DSP processors have lots of registers which can be used to hold intermediate values. Registers may be fixed point or floating point format.

Array handling requires that data can be fetched efficiently from consecutive memory locations. This involves generating the next required memory address. For this reason DSP processors have address registers which are used to hold addresses and can be used to generate the next needed address efficiently.

The ability to generate new addresses efficiently is a characteristic feature of DSP processors. Usually, the next needed address can be generated during the data fetch or store operation, and with no overhead. DSP processors have rich sets of address generation operations.

| Syntax | Addressing mode | Operation |
| --- | --- | --- |
| `*rP` | register indirect | Read the data pointed to by the address in register rP |
| `*rP++` | post increment | Having read the data, post increment the address pointer to point to the next value in the array |
| `*rP--` | post decrement | Having read the data, post decrement the address pointer to point to the previous value in the array |
| `*rP++rI` | register post increment | Having read the data, post increment the address pointer by the amount held in register rI to point to rI values further down the array |
| `*rP++rIr` | bit reversed | Having read the data, post increment the address pointer to point to the next value in the array, as if the address bits were in bit reversed order |

Figure 3‑6: Addressing modes for the Lucent DSP32C processor.

Figure 3‑6 shows some addressing modes for the Lucent DSP32C processor. The assembler syntax is very similar to that of the C language. Whenever an operand is fetched from memory using register indirect addressing, the address register can be incremented to point to the next needed value in the array. This address increment is free - there is no overhead involved in the address calculation - and in the case of the Lucent DSP32C processor, up to three such addresses may be generated in each single instruction. Address generation is an important factor in the speed of DSP processors at their specialized operations.
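The post-increment and post-decrement modes of Figure 3‑6 can be imitated in software to show what the address register does. This is only a behavioural sketch: the function `read_post_modify` and the small `memory` list are hypothetical, and on the processor the pointer update happens as part of the fetch rather than as a separate step.

```python
def read_post_modify(memory, pointer, step=1):
    """Read memory[pointer], then return the value and the updated pointer.

    step = 1  models *rP++     (post increment)
    step = -1 models *rP--     (post decrement)
    step = rI models *rP++rI   (register post increment)
    """
    return memory[pointer], pointer + step


memory = [10, 20, 30, 40, 50, 60]

# Walk the array with a stride of 2, as *rP++rI would with rI = 2.
pointer = 0
total = 0
for _ in range(3):
    value, pointer = read_post_modify(memory, pointer, step=2)
    total += value
print(total, pointer)
```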

The last addressing mode - bit reversed - shows how specialized DSP processors can be. Bit reversed addressing arises when a table of values has to be reordered by reversing the order of the address bits:

- reverse the order of the bits in each address

- shuffle the data so that the new, bit reversed, addresses are in ascending order

This operation is required in the Fast Fourier Transform - and just about nowhere else. So one can see that DSP processors are designed specifically to calculate the Fast Fourier Transform efficiently.
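The reordering described above can be written out in a few lines. This is a sketch of the shuffle itself, done in software; the function names are illustrative, and a DSP with bit reversed addressing produces the same sequence of addresses in hardware with no extra instructions.

```python
def bit_reverse(index, bits):
    """Reverse the lowest `bits` bits of `index`."""
    result = 0
    for _ in range(bits):
        result = (result << 1) | (index & 1)
        index >>= 1
    return result


def bit_reversed_order(values):
    """Return `values` reordered by bit reversed address.

    The length must be a power of two, as in a radix-2 FFT.
    """
    n = len(values)
    bits = n.bit_length() - 1
    if n == 0 or (1 << bits) != n:
        raise ValueError("length must be a power of two")
    return [values[bit_reverse(i, bits)] for i in range(n)]


print(bit_reversed_order(list(range(8))))
```

For an eight-entry table, address 1 (binary 001) swaps with address 4 (binary 100), and address 3 (011) swaps with address 6 (110), while addresses whose bit patterns are palindromes stay in place.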

3.2.3 Input and Output Interfaces

In addition to the mathematics, in practice DSP is mostly dealing with the real world. Although this aspect is often forgotten, it is of great importance and marks some of the greatest distinctions between DSP processors and general-purpose microprocessors.

In a typical DSP application, the processor will have to deal with multiple sources of data from the real world (Figure 3‑7). In each case, the processor may have to be able to receive and transmit data in real time, without interrupting its internal mathematical operations. There are three sources of data from the real world:

- Signals coming in and going out

- Communication with an overall system controller of a different type

- Communication with other DSP processors of the same type

These multiple communication routes mark the most important distinctions between DSP processors and general-purpose processors. The need to deal with these different sources of data efficiently leads to special communication features on DSP processors.

Figure 3‑7: Communication of DSP with overall system controllers.

When DSP processors first came out, they were rather fast processors: for example the first floating point DSP - the AT&T DSP32 - ran at 16 MHz at a time when PC clocks were 5 MHz. This meant that we had very fast floating point processors: a fashionable demonstration at the time was to plug a DSP board into a PC and run a fractal (Mandelbrot) calculation on the DSP and on a PC side by side. The DSP fractal was of course faster. Today, however, the fastest DSP processor is the Texas TMS320C6201, which runs at 200 MHz. This is no longer very fast compared with an entry level PC. In fact, the same fractal today will actually run faster on the PC than on the DSP. However, DSP processors are still used - why? The answer lies only partly in that the DSP can run several operations in parallel: a far more basic answer is that the DSP can handle signals very much better than a Pentium. Try feeding eight channels of high quality audio data in and out of a Pentium simultaneously in real time, without affecting the processor performance, if you want to see a real difference.

Figure 3‑8: The four activities on a synchronous serial port.

Signals tend to be fairly continuous, but at audio rates or not much higher. They are usually handled by high-speed synchronous serial ports. Serial ports are inexpensive - having only two or three wires - and are well suited to audio or telecommunications data rates up to 10 Mbit/s. Most modern speech and audio analogue to digital converters interface to DSP serial ports with no intervening logic. A synchronous serial port requires only three wires: clock, data, and word sync (Figure 3‑8). The addition of a fourth wire (frame sync) and a high impedance state when not transmitting makes the port capable of Time Division Multiplex (TDM) data handling, which is ideal for telecommunications.

DSP processors usually have synchronous serial ports - transmitting clock and data separately - although some, such as the Motorola DSP56000 family, have asynchronous serial ports as well (where the clock is recovered from the data). Timing is versatile, with options to generate the serial clock from the DSP chip clock or from an external source. The serial ports may also be able to support separate clocks for receive and transmit - a useful feature, for example, in satellite modems where the clocks are affected by Doppler shifts. Most DSP processors also support companding to A-law or mu-law in serial port hardware with no overhead - the Analog Devices ADSP2181 and the Motorola DSP56000 family do this in the serial port, whereas the Lucent DSP32C has a hardware compander in its data path instead.
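To give a feel for what companding does, the sketch below implements the continuous mu-law curve with mu = 255, using the textbook formula y = sgn(x) ln(1 + mu|x|) / ln(1 + mu) for inputs normalized to the range -1 to +1. This is an illustration of the principle only: telephony hardware uses a segmented 8 bit approximation of this curve, and nothing here is taken from the serial port of any of the processors named above.

```python
import math

MU = 255.0  # standard mu-law parameter


def mu_law_compress(x):
    """Compress a sample in [-1, 1] with the continuous mu-law curve."""
    return math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)


def mu_law_expand(y):
    """Inverse of mu_law_compress."""
    return math.copysign(math.expm1(abs(y) * math.log1p(MU)) / MU, y)


# Small signals are boosted far more than large ones.
for x in (0.01, 0.1, 1.0):
    print(x, mu_law_compress(x))
```

The compressed value is what gets quantized to a small number of bits, so quiet passages keep more resolution than they would under uniform quantization.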

The serial port will usually operate under DMA - data presented at the port is automatically written into DSP memory without stopping the DSP - with or without interrupts. It is usually possible to receive and transmit data simultaneously.

The serial port has dedicated instructions which make it simple to handle. Because it is standard to the chip, this means that many types of actual I/O hardware can be supported with little or no change to code - the DSP program simply deals with the serial port, no matter to what I/O hardware this is attached.

Host communications is an element of many, though not all, DSP systems. Many systems will have another, general purpose, processor to supervise the DSP: for example, the DSP might be on a PC plug-in card or a VME card - simpler systems might have a microcontroller to perform a 'watchdog' function or to initialize the DSP on power up. Whereas signals tend to be continuous, host communication tends to require data transfer in batches - for instance to download a new program or to update filter coefficients. Some DSP processors have dedicated host ports which are designed to communicate with another processor of a different type, or with a standard bus. For instance the Lucent DSP32C has a host port which is effectively an 8 bit or 16 bit ISA bus: the Motorola DSP56301 and the Analog Devices ADSP21060 have host ports which implement the PCI bus.

The host port will usually operate under DMA - data presented at the port is automatically written into DSP memory without stopping the DSP - with or without interrupts. It is usually possible to receive and transmit data simultaneously.

The host port has dedicated instructions, which make it simple to handle. The host port imposes a welcome element of standardization to plug-in DSP boards - because it is standard to the chip, it is relatively difficult for individual designers to make the bus interface different. For example, of the 22 main different manufacturers of PC plug-in cards using the Lucent DSP32C, 21 are supported by the same PC interface code: this means it is possible to swap between different cards for different purposes, or to change to a cheaper manufacturer, without changing the PC side of the code. Of course this is not foolproof - some engineers will always 'improve upon' a standard by making something incompatible if they can - but at least it limits unwanted creativity.

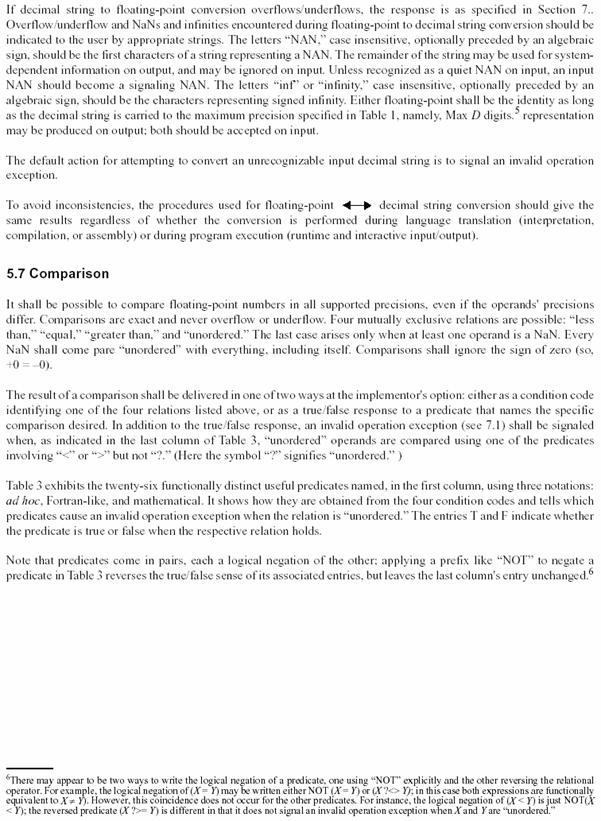

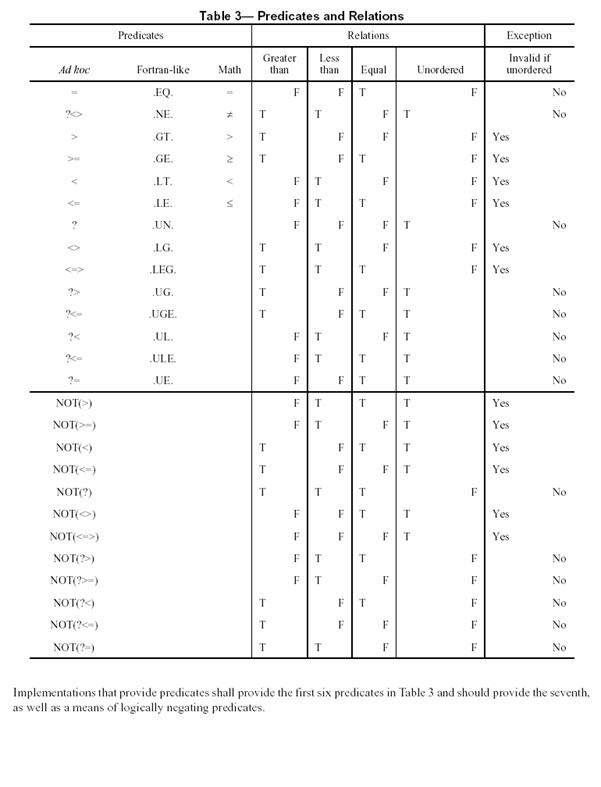

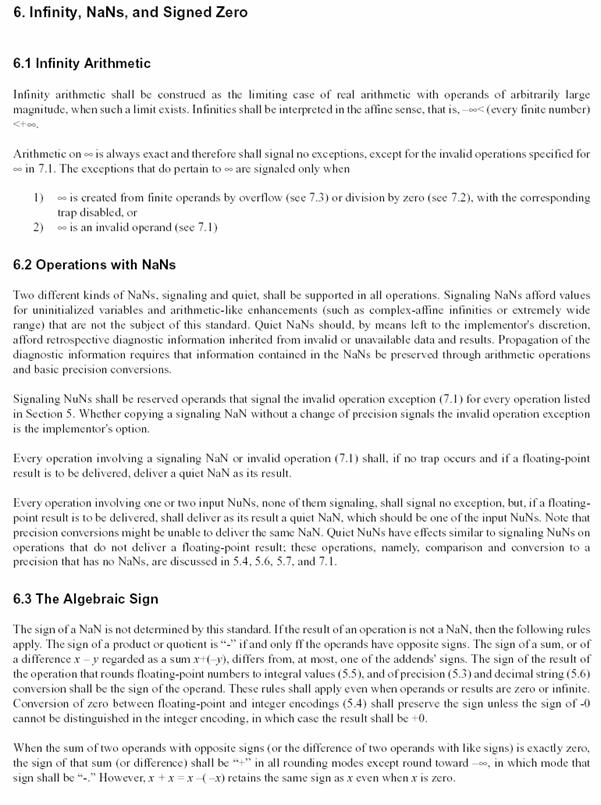

Interprocessor communications is needed when a DSP application is too much for a single processor - or where many processors are needed to handle multiple but connected data streams. Link ports provide a simple means to connect several DSP processors of the same type. The Texas TMS320C40 and the Analog Devices ADSP21060 both have six link ports (called 'comm. ports' for the 'C40). These would ideally be parallel ports at the word length of the processor, but this would use up too many pins (six ports, each 32 bits wide, would need 192 data pins, which is a lot even if we neglect grounds). So a hybrid called serial/parallel is used: in the 'C40, comm. ports are 8 bits wide and it takes four transfers to move one 32 bit word - in the 21060, link ports are 4 bits wide and it takes 8 transfers to move one 32 bit word.
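The serial/parallel trade-off can be illustrated by splitting a 32 bit word into port-width units and reassembling it. This is a sketch under assumptions: the function names are hypothetical, and sending the least significant unit first is an arbitrary choice made here, not a statement about the actual transfer order on either processor.

```python
def split_word(word, port_bits, word_bits=32):
    """Split a word into port-width units, least significant unit first.

    The transfer order is an assumption made for illustration.
    """
    mask = (1 << port_bits) - 1
    transfers = word_bits // port_bits
    return [(word >> (i * port_bits)) & mask for i in range(transfers)]


def join_word(units, port_bits):
    """Reassemble a word from units produced by split_word."""
    word = 0
    for i, unit in enumerate(units):
        word |= unit << (i * port_bits)
    return word


word = 0x12345678
print(len(split_word(word, 8)))  # transfers on an 8 bit wide port
print(len(split_word(word, 4)))  # transfers on a 4 bit wide port
```

Halving the port width halves the data pins per port but doubles the number of transfers per word, which is the compromise the two designs strike differently.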